Forum tip: Always check when replies were posted. Technology evolves quickly, so some answers may no longer be up to date.

Paul Naish

I am using CB Desktop on Windows 10 to back up to a folder in an AWS bucket. This is a new backup.

When I was running it, I found it was picking up files that had not changed. For example, for one file it picked up, CB said the Modification Date was "2020-07-11 19:21:32", while the directory listed the modification date as "2019-09-21 7:21PM". The create date on W10 was even earlier.

I've compared this backup to others, and the only difference is that this one goes to an AWS folder in a bucket as opposed to a bucket directly.

What am I missing?

David Gugick

When you say "the directory listed the modification date," what directory do you mean? You mention Windows 10 right after, so I was unclear about the previous sentence.

Have you checked backup storage to see what is there for that file (use the Storage tab to find it and see if there are multiple versions), and whether it matches what is in the source folder?

If you're still having trouble reconciling the backup, please post some screenshots of the source folder and of Backup History showing the file in question. Thanks.

Paul Naish

Hi David. Sorry for the confusion. I created the attached document to show what I'm observing. It looks like CB is out of sync with AWS and thinks files have not been saved yet.

Attachment: Keeper Backup Problems

Paul Naish

I found this article and ran the consistency check. I received a number of errors like the following.

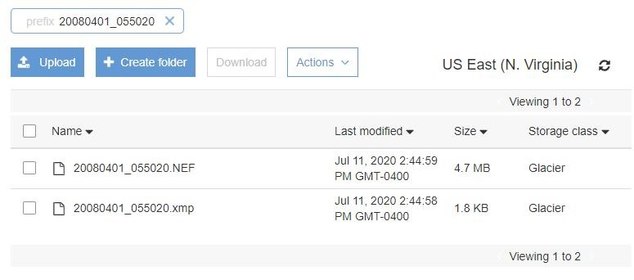

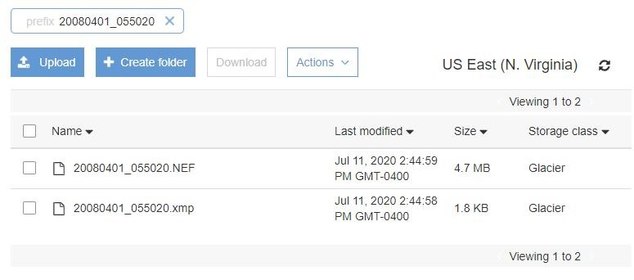

| Type | File | Size | Result | Finish Time | Duration | Modification Date | Error Message |
|---|---|---|---|---|---|---|---|
| ConsistencyCheck | `Keepers\K:\Keeper\20080401_055020.NEF` | 4924153 | Failed | 2020-07-18 10:00:22 | 0 | 2019-03-09 08:30:45 | File was not found in backup storage - resolved |

However, when I check the AWS console, 20080401_055020.NEF is in the AWS folder (see attached).

I'm not sure about next steps.

Attachment: Capture
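
A consistency check that reports "File was not found in backup storage" for an object that is visible in the AWS console suggests the tool is looking under a different key than the one that exists. The sketch below illustrates that kind of reconciliation: it maps each local path to an expected object key and reports the paths whose key is absent from a listing. The `to_key` layout (prefix plus the drive-relative path with forward slashes) is a hypothetical assumption for illustration, not CloudBerry's documented format.

```python
from pathlib import PureWindowsPath


def to_key(local_path: str, prefix: str) -> str:
    """Map a Windows path to an object key (hypothetical layout).

    Assumption: key = prefix + path without the drive, using "/" separators.
    A real backup tool may lay out keys differently.
    """
    p = PureWindowsPath(local_path)
    # p.parts is e.g. ('K:\\', 'Keeper', 'x.NEF'); drop the drive anchor if present
    rel = p.parts[1:] if p.anchor else p.parts
    return prefix.rstrip("/") + "/" + "/".join(rel)


def missing_from_storage(local_paths, remote_keys, prefix):
    """Return the local paths whose expected key is not in the listing."""
    remote = set(remote_keys)
    return [p for p in local_paths if to_key(p, prefix) not in remote]


local = [r"K:\Keeper\20080401_055020.NEF"]
print(missing_from_storage(local, ["KEEPERS/Keeper/20080401_055020.NEF"], "KEEPERS"))
# []  (the expected key exists)
print(missing_from_storage(local, ["KEEPERS/20080401_055020.NEF"], "KEEPERS"))
# ['K:\\Keeper\\20080401_055020.NEF']  (object exists, but under another key)
```

The point of the sketch is that "not found" is only ever relative to the key the checker expects; the same object can be present and still be reported missing.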

David Gugick

This could be a Glacier issue. What's your lifecycle policy for moving the data into Glacier, or are you backing up directly to Glacier? I'll have to reach out to the team to see if they have any ideas, but Glacier, depending on how it's used, can be difficult with some operations because there are delays before data is ready to be read.

Paul Naish

I next found this article and ran Repository Sync.

I then ran the prescribed `cbb.exe account -s "your_account_name" -custom "folder_name"` command, which the article said was needed since I was backing up to a custom folder.

I'm rerunning the backup now, and from all indications it's only picking up new/changed files. It will still take a day or so to complete.

Paul Naish

No joy. It started to back up files that were already there.

I found the following in one of the links above. Is this likely causing my problem? If so, how would I migrate KEEPERS from a FOLDER to a BUCKET?

"When you backing up files using a custom mode, the backup service copies these files to a specified folder in the target storage as is, without storing any meta data, such as information about file versions and their modification dates."

David Gugick

A couple questions:

1. Why are you using Custom Mode? Is there some use case you are looking to solve? If not, you should use Advanced Mode, as that mode gives you the greatest control over backup data, versions, retention, etc.

2. Why are you using Glacier? Glacier is for long-term, archival data: data that you do not plan to access, but need to keep just in case. Restoring data from Glacier can be expensive and can take hours before the data is ready for a restore. Also, if you back up to Glacier directly, it's much more difficult to get a file listing in a timely fashion. Glacier also has a 90-day retention requirement: if you delete your data before 90 days, Amazon charges you for the remaining days. But if you simply want to back up this data once and keep it for years, or you have data that you need to keep long term, then Glacier is a good solution, although I would still recommend that you back up to S3 Standard and move the data to Glacier using a lifecycle policy.

So, to help you, I need to better understand your needs for this data, today and going forward. Then I can recommend the best solution for your needs.
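
The 90-day retention point is simple arithmetic: objects deleted early are billed for the remaining days of the minimum period. A sketch, assuming a flat per-GB-month price and 30-day months; the $0.004/GB-month figure below is a placeholder for illustration, not a quoted AWS rate.

```python
def early_deletion_charge(size_gb: float, days_stored: int,
                          price_per_gb_month: float,
                          minimum_days: int = 90) -> float:
    """Extra charge for deleting data before the minimum storage duration.

    Assumes the charge is prorated per day over 30-day months.
    """
    remaining_days = max(0, minimum_days - days_stored)
    return size_gb * price_per_gb_month * remaining_days / 30


# Hypothetical example: 36 GB deleted after 30 days at $0.004/GB-month
print(round(early_deletion_charge(36, 30, 0.004), 4))  # 0.288
```

For a small archive the penalty is small in dollars, but it applies every time a backup tool prunes an old version too soon.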

Paul Naish

1. No particular use for Custom Mode, which is simply referred to as a folder in AWS parlance. It seemed an easy way to create a new storage area.

2. I went to Glacier when it came out because it was cheaper and it is for data that I will not access unless I need a restore.

At the moment I'm only spending a couple of bucks a month on AWS.

David Gugick

If you're simply looking to save on storage and would rather not deal with the side effects of using archive storage tiers like Glacier, then I'd recommend you look for less expensive hot cloud storage. Wasabi and Backblaze B2 come to mind: both are about $6/TB, and the data is available in real time. Wasabi may have a retention requirement, but it has no API or data egress charges. Backblaze B2 has low API charges, but no retention requirement.

But if you want to stick with Glacier, it would probably be easier to back up to S3 Standard and use a lifecycle policy to move the data to S3 Glacier. You could do that even after 0 days, but preferably after a short period during which you might need a restore, or during which MSP360 Backup might remove an old version, so that you are not charged for the 90-day retention.

Custom Mode is not the best choice for what you need. Please consider using Advanced Mode: you can compress and encrypt the data for safekeeping, and it will allow you to restore to the original folders if needed.
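
For the S3 Standard plus lifecycle approach, the rule is a small JSON document. The sketch below builds one in Python; the structure follows the S3 lifecycle configuration format (`Rules`, `Filter`, `Status`, `Transitions`, `StorageClass`) as accepted by, for example, `aws s3api put-bucket-lifecycle-configuration`. The rule ID, the `KEEPERS/` prefix and the 30-day delay are illustrative assumptions.

```python
import json


def glacier_transition_rule(prefix: str, days: int,
                            rule_id: str = "keepers-to-glacier") -> dict:
    """Build an S3 lifecycle configuration that transitions objects under
    `prefix` to the GLACIER storage class `days` days after creation."""
    return {
        "Rules": [
            {
                "ID": rule_id,                      # hypothetical rule name
                "Filter": {"Prefix": prefix},       # only objects under this prefix
                "Status": "Enabled",
                "Transitions": [{"Days": days, "StorageClass": "GLACIER"}],
            }
        ]
    }


config = glacier_transition_rule("KEEPERS/", days=30)
print(json.dumps(config, indent=2))
# Save the output as lifecycle.json, then for example:
#   aws s3api put-bucket-lifecycle-configuration --bucket <your-bucket> \
#       --lifecycle-configuration file://lifecycle.json
```

A short delay rather than 0 days leaves a window where restores are instant and pruned versions are not subject to the Glacier minimum.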

Paul Naish

Thanks. I'm not about to incur the network costs of moving storage providers. I don't have an unlimited plan. As I've already mentioned, my costs with AWS are currently cheap.

But let's get back to my problem. With Custom/Folder, CB does not seem to remember what is backed up there. As noted above from your documentation: "When you backing up files using a custom mode, the backup service copies these files to a specified folder in the target storage as is, without storing any meta data, such as information about file versions and their modification dates." Unfortunately, I do not believe this is noted when you select Custom.

So, if the problem is with how CB supports AWS folders through Custom, can I move the contents of my folder to an AWS bucket and sync so that CB Backup understands which files have been backed up?

David Gugick

You can't move the files. There is no such thing as a move in Glacier anyway. The best way to correct this is to switch to Advanced mode and back up the files again to Glacier.

I'm not sure what you're referring to with network costs related to moving storage providers. But I'll reiterate that backing up to Glacier is not ideal. We do support it with the stand-alone products, but we do not support it with our MSP-focused products. If cheap is what you are after, I think you'll have a much better experience using something like Wasabi or Backblaze B2. But as I stated above, if you want to keep Glacier, create a new backup plan, switch the type to Advanced, and back up the files again. Once that is complete and you are sure you will not need the files you currently have backed up, you can delete the old files from the Storage tab in the client.

Paul Naish

David, if I change storage providers, I have to upload my files again. My home account with my ISP has a monthly ceiling for uploads. I'd blow through that and be charged for the extra GBs.

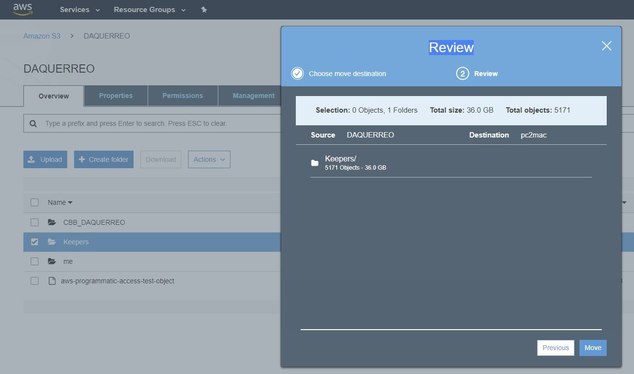

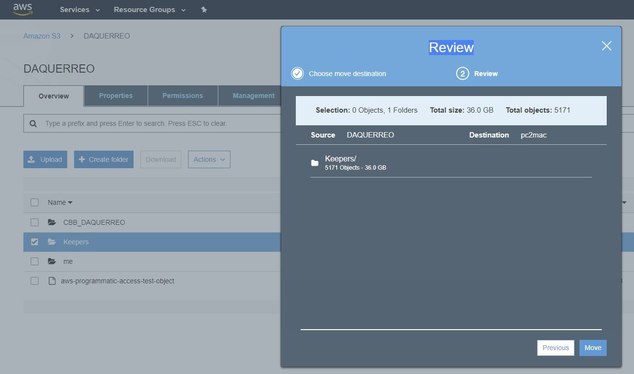

I'm not sure what you mean by there being no MOVE in GLACIER. I just went into the AWS Console, and it let me select the KEEPERS folder in one bucket for a MOVE to another bucket. See attached.

So, if AWS lets me move my 36GB of files from KEEPERS to a new bucket, can CB Backup sync with this new bucket if I retain the folder directory structure?

Thanks

Attachment: Capture 1
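
On the MOVE question: to the best of my knowledge, S3 has no server-side rename, so a console move is carried out as a copy to the destination followed by a delete of the source, and objects already in the Glacier storage class must be restored before S3 will copy them. That is consistent with the statement that there is no move in Glacier. If the files are copied, retaining the folder structure just means rewriting each key's prefix. The sketch below only plans those copies; the prefixes and the `2008/` subfolder are hypothetical, and issuing the actual restore, copy and delete requests is left out.

```python
def remap_key(key: str, old_prefix: str, new_prefix: str) -> str:
    """Replace `old_prefix` with `new_prefix`, keeping the rest of the path."""
    if not key.startswith(old_prefix):
        raise ValueError(f"{key!r} does not start with {old_prefix!r}")
    return new_prefix + key[len(old_prefix):]


def plan_move(keys, old_prefix, new_prefix):
    """Return (source_key, destination_key) pairs for a copy-then-delete move.

    Keys outside `old_prefix` are left alone.
    """
    return [(k, remap_key(k, old_prefix, new_prefix))
            for k in keys if k.startswith(old_prefix)]


pairs = plan_move(["KEEPERS/2008/20080401_055020.NEF", "other/x.jpg"],
                  "KEEPERS/", "")
print(pairs)
# [('KEEPERS/2008/20080401_055020.NEF', '2008/20080401_055020.NEF')]
```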

David Gugick

Yes, but only if you keep the same backup type. If you change to Advanced, that's not possible; the backup format is proprietary. I think you need to explain again what you need. Is it a desire to move to Advanced mode but retain the backups already made with Custom mode?

Paul Naish

Thanks David. I'll play around with it.

I am not planning to go to Advanced for the type of backup I need. Using Advanced binds my AWS storage to CloudBerry for access. Simple allows me to use other tools, such as the AWS CLI.

This KEEPERS storage is for photos I've identified as 'KEEPERS', a subset of my entire photo library, which is impractical to store in the cloud. I have multiple local and off-site backups of the entire library. I have a program that runs and identifies those I've flagged with at least one star. These are copied to a local disk, which is then copied to AWS.
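
The KEEPERS pipeline described here (select photos rated at least one star, copy them to a local disk, then copy that disk to AWS) can be sketched in a few lines. Where the ratings come from is not stated, so the sketch assumes a hypothetical `ratings` mapping from library-relative path to star count. It uses `shutil.copy2`, which also copies timestamps, so staged copies keep the original modification time and a later size/mtime comparison does not see them as changed.

```python
import shutil
import tempfile
from pathlib import Path


def stage_keepers(library: Path, staging: Path, ratings: dict,
                  min_stars: int = 1) -> list:
    """Copy photos rated >= min_stars from `library` into `staging`,
    keeping their relative folder structure. Returns the copied paths.

    `ratings` (hypothetical) maps a path relative to `library` to its stars.
    """
    copied = []
    for rel, stars in ratings.items():
        if stars < min_stars:
            continue
        src, dst = library / rel, staging / rel
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dst)  # copies data plus timestamps
        copied.append(dst)
    return copied


# Usage with a throwaway library: only the 1-star photo is staged.
with tempfile.TemporaryDirectory() as tmp:
    lib, stage = Path(tmp, "library"), Path(tmp, "staging")
    (lib / "2008").mkdir(parents=True)
    (lib / "2008" / "a.NEF").write_bytes(b"a")
    (lib / "2008" / "b.NEF").write_bytes(b"b")
    copied = stage_keepers(lib, stage, {"2008/a.NEF": 1, "2008/b.NEF": 0})
    print([p.relative_to(stage).as_posix() for p in copied])  # ['2008/a.NEF']
```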

David Gugick

I understand. Simple just copies the files, which allows you to access them with another product. It's possible that some files were changed internally. If you think that the product is still picking up file changes incorrectly, then I would need you to open a support case using the Tools | Diagnostic toolbar option. Thanks.
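
To check whether a file really changed internally, its content can be compared instead of its dates. For objects uploaded to S3 in a single part (and without SSE-KMS encryption), the ETag is normally the hex MD5 of the content; multipart uploads produce an ETag with a `-N` suffix that is not a plain MD5, so this comparison does not apply to them. It is also only meaningful when the object is a byte-for-byte copy, as with Simple mode. A sketch, assuming the ETag has already been read (for example from the object's properties in the AWS console):

```python
import hashlib
import os
import tempfile
from typing import Optional


def file_md5(path: str, chunk_size: int = 1 << 20) -> str:
    """Hex MD5 of a file, read in chunks to keep memory use low."""
    h = hashlib.md5()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def content_matches(path: str, etag: str) -> Optional[bool]:
    """Compare a local file's MD5 with an S3 ETag.

    Returns None for multipart ETags ("<hash>-<parts>"), which cannot be
    compared with a plain MD5.
    """
    etag = etag.strip('"').lower()
    if "-" in etag:
        return None
    return file_md5(path) == etag


# Usage with a throwaway file containing b"hello".
fd, name = tempfile.mkstemp()
os.write(fd, b"hello")
os.close(fd)
print(content_matches(name, '"5d41402abc4b2a76b9719d911017c592"'))  # True
print(content_matches(name, '"abc123-2"'))                          # None
os.remove(name)
```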


- © 2025 MSP360 Forum