Comments

-

restore version (is it possible to choose from the available backup dates?)

The idea is not to select files and then a version for each file, but the opposite: select a version (backup date) and, for that backup date and time, select the folders/files.

But anyway, if it is not on the CB roadmap, let's make the best of what is here :D

Thanks for your follow-up, David. Have a nice day -

restore version (is it possible to choose from the available backup dates?)

Hello David

The use case is: the content of a folder gets corrupted (virus/crypto, hardware problem, software malfunction...), so the backup has backed up the corrupted files/subfolders and replaced the good ones with them...

Don't forget that in this case you do not see in real time that there is a problem with your files.

Also, the backup continues after the incident, so the files keep being backed up in a corrupted state.

The problem is that most of the time you do not really know when it happened, so you make several attempts to get closer.

With a list of available backups sorted by date/time, you proceed by trial: you choose a date/time and see whether the restored files are healthy. If they are, you try again with a more recent backup until you reach the corrupt version. Then you know you have to move one step back, and you are sure you have the very last good version.
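The trial-and-error search described above can be shortened with a bisection over the sorted backup list. The sketch below is only an illustration: `last_good_backup` and the `is_healthy` callback (which stands in for "restore this backup and check the files by hand") are hypothetical names, not part of CloudBerry Backup. It assumes every backup taken before the corruption is healthy and every later one is corrupt.

```python
from datetime import datetime
from typing import Callable, Optional, Sequence


def last_good_backup(
    backups: Sequence[datetime],
    is_healthy: Callable[[datetime], bool],
) -> Optional[datetime]:
    """Return the newest healthy backup, or None if even the oldest is corrupt.

    `backups` must be sorted from oldest to newest. `is_healthy` is a
    hypothetical check (for example: restore that backup and open the files).
    """
    lo, hi = 0, len(backups)  # backups[:lo] are healthy, backups[hi:] are corrupt
    while lo < hi:
        mid = (lo + hi) // 2
        if is_healthy(backups[mid]):
            lo = mid + 1  # the corruption happened after this backup
        else:
            hi = mid  # this backup is already corrupt
    return backups[lo - 1] if lo > 0 else None


# Example: hourly backups on one day, corruption at 05:50 (made-up data).
hourly = [datetime(2019, 12, 2, hour) for hour in range(24)]
corrupted_at = datetime(2019, 12, 2, 5, 50)
print(last_good_backup(hourly, lambda taken: taken < corrupted_at))
```

With 24 backups this needs at most 5 test restores instead of up to 24, and it ends on the last good version rather than just a good one.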

When you try to restore with systems based on a blind "last version", first, you will restore corrupted files, and second, if you try to go back in time, you never know whether you have the last good version or just a good version.

I think that is the point: how do you know whether you have the last good version or just a good version? -

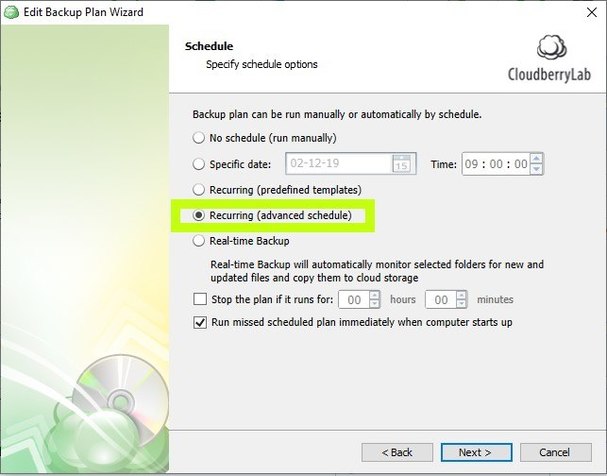

Near Continuous Data Protection (Near CDP) - where is the option to enable it ?

I've done this in the past, and support told me not to use the real-time function. After the announcement of the new real-time backup I gave it another try, but saw the same behaviour.

I will wait for the GFS and disk destination rotation features to arrive before running new tests.

Thanks, Matt -

restore version (is it possible to choose from the available backup dates?)

Thanks David,

I've seen those options, but they seem to automatically select "the last/closest backup" without the possibility of choosing precise backup dates. I mean, I can choose a range of dates, but I would prefer to choose exact backup dates (more precise when you have to make several attempts).

Thanks anyway, David, have a nice day -

restore version (is it possible to choose from the available backup dates?)

Good answer!

Do you have a solution for when the content of a folder gets corrupted (virus/crypto, hardware problem, software malfunction...), so that the backup has backed up the corrupted files/subfolders and replaced the good ones with them? -

restore version (is it possible to choose from the available backup dates?)

Not only files: folders, subfolders and the files within them.

For example, let's say I deleted a folder by mistake at 05:50 one month ago and I back up every hour: I could restore the folder as it was at 5 o'clock (instead of choosing file by file or getting the last backup, which is too late that day) -

restore version (is it possible to choose from the available backup dates?)

Hello David,

Thanks for your feedback, it was so obvious... thanks.

Do you know if it is planned to have an option that shows all files and folders at a certain date (and before of course)?
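To make the request concrete, here is a minimal sketch of such a "view at a certain date". It assumes a hypothetical catalogue that maps each backed-up path to the timestamps of its stored versions; `snapshot_at` and the sample paths are illustrative names only, not an existing CloudBerry Backup function. For simplicity it ignores deletion markers.

```python
from bisect import bisect_right
from datetime import datetime
from typing import Dict, List


def snapshot_at(versions: Dict[str, List[datetime]], when: datetime) -> Dict[str, datetime]:
    """For each path, pick the newest stored version taken at or before `when`.

    Paths whose first version is newer than `when` are left out, because
    they did not exist in the backup yet at that moment.
    """
    view: Dict[str, datetime] = {}
    for path, times in versions.items():
        ordered = sorted(times)
        count = bisect_right(ordered, when)  # number of versions taken <= when
        if count:
            view[path] = ordered[count - 1]
    return view


# Made-up catalogue: two files backed up at different times.
catalogue = {
    "Documents/report.ods": [datetime(2019, 12, 2, 4), datetime(2019, 12, 2, 5), datetime(2019, 12, 2, 7)],
    "Documents/notes.txt": [datetime(2019, 12, 2, 6)],
}
print(snapshot_at(catalogue, datetime(2019, 12, 2, 5, 30)))
```

Restoring "the folder as it was at 05:30" then means restoring exactly the versions listed in the returned view.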

Thanks -

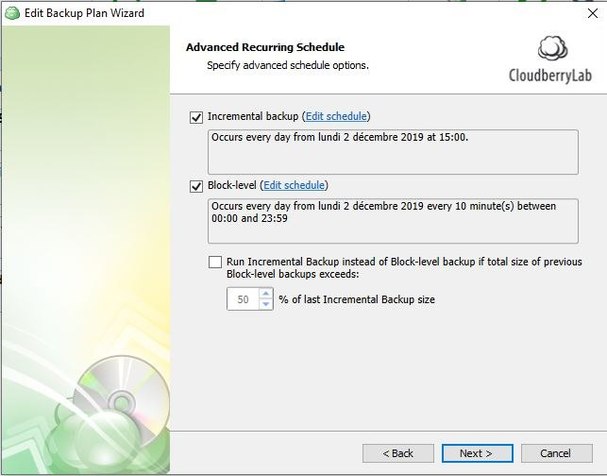

Near Continuous Data Protection (Near CDP) - where is the option to enable it ?

Yes,

After startup the real-time backup works fine, but it doesn't last long...

Example: I upload a new file and add a new file to a zip file; the real-time backup does not back up those files. I wait for two instant backup cycles, but the files are still not backed up.

If I reboot the machine, the files are backed up just after reboot -

Scheduled Backup (block level) duplicate files when modified instead of just adding blocks

Thanks David,

I wish you a happy New Year and a merry Christmas! -

Scheduled Backup (block level) duplicate files when modified instead of just adding blocks

David, after my tests I'm wondering what the purpose of block-level backup is... It does not seem useful for pictures, mp3s, videos, office documents, zip files...

In which main cases is it useful?

Thanks for sharing your view -

Scheduled Backup (block level) duplicate files when modified instead of just adding blocks

Hello again David,

Yes, I tried with xlsx and docx and can confirm your information.

I will try other files, including your suggestion with a pst file.

Nice tip about the zip extension on office files, I will give it a try...

Have a nice day/evening/weekend, David -

Scheduled Backup (block level) duplicate files when modified instead of just adding blocks

Hello David,

Thanks for this information!

I will do some testing with .xlsx or .docx and see if it can help with my testing...

Thanks again for your help, I would never have thought about the file compression of ODS and the consequences of compression on file structure, even at block level! Thanks for your help -

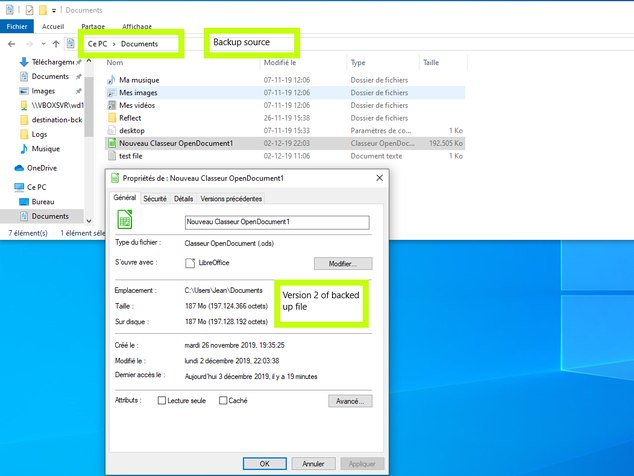

Scheduled Backup (block level) duplicate files when modified instead of just adding blocks

Hello,

Some more information:

Logs:

2019-12-02 21:51:55,406 [PL] [1] INFO - File C:\Users\Jean\Documents\Nouveau Classeur OpenDocument1.ods. Full backup will be created. Parts modified 1411 of 1411
2019-12-02 21:51:55,426 [CL] [1] INFO - Item generated: \\?\GLOBALROOT\Device\HarddiskVolumeShadowCopy85\Users\Jean\Documents\Nouveau Classeur OpenDocument1.ods
2019-12-02 21:51:55,431 [PL] [1] INFO - Command added: Upload operation. Cloud path: E:\destination-bck\CloudBerryBackup\CBB_DESKTOP-SSQ2NJI\C$\Users\Jean\Documents\Nouveau Classeur OpenDocument1.ods$\20191202200305\Nouveau Classeur OpenDocument1.ods. IsSimple: False. Modified date: 02/12/2019 20:03:05. Size: 176 MB (184939671)
...
2019-12-02 21:52:09,644 [PL] [11] INFO - Command completed: Upload operation. Cloud path: E:\destination-bck\CloudBerryBackup\CBB_DESKTOP-SSQ2NJI\C$\Users\Jean\Documents\Nouveau Classeur OpenDocument1.ods$\20191202200305\Nouveau Classeur OpenDocument1.ods. IsSimple: False. Modified date: 02/12/2019 20:03:05. Size: 176 MB (184939671)
2019-12-02 21:52:09,960 [PL] [11] INFO - Update history file called again: C:\Users\Jean\Documents\Nouveau Classeur OpenDocument1.ods
2019-12-02 21:52:09,964 [PL] [11] INFO - 6 files copied (total size: 176 MB): C:\Users\Jean\Documents\Nouveau Classeur OpenDocument1.ods
2019-12-02 21:52:09,967 [CL] [11] INFO - Running items count: 0, Awaiting: 0, Paused: 0, NA: 0, Failed: 0, SuccessCount: 0, WarningCount:0, 0 In progress count: 0, Idly Running count: 0
2019-12-02 21:52:09,968 [PL] [11] INFO - Entering sleeping mode event in CommandQueue occurred
2019-12-02 21:52:09,971 [PL] [11] INFO - Queue completed event fired
2019-12-02 21:52:09,972 [PL] [11] INFO - Upload queue is empty. Search thread is finished
2019-12-02 21:52:09,977 [PL] [11] INFO - Delete temporary parts. Time: 00:00:00.0031182
2019-12-02 21:52:10,776 [PL] [11] INFO - Setting waiting for complete
2019-12-02 21:52:10,777 [PL] [1] INFO - Backup engine wait completed
2019-12-02 21:52:11,790 [SERV] [1] INFO - Upload thread finished
2019-12-02 21:52:11,798 [SERV] [1] INFO - ImportFinalizing Start
2019-12-02 21:52:11,806 [SERV] [1] INFO - ImportFinalizing End
2019-12-02 21:52:11,899 [PL] [1] NOTICE - Creating purge queue started. Purge conditions: version count: 7, delete topurge elements
2019-12-02 21:52:11,906 [PL] [1] INFO - Files for purge with versions found: 9
2019-12-02 21:52:11,944 [PL] [1] INFO - Folder markers for purge found: 0
2019-12-02 21:52:11,948 [PL] [1] NOTICE - Purge version search time: 00:00:00. Files checked for this plan: 4. Files for purge found: 0
......
2019-12-02 22:11:48,041 [PL] [1] INFO - Item contains explicit acl records: C:\Users\Jean\Documents
2019-12-02 22:11:48,063 [PL] [1] INFO - Item contains explicit acl records: C:\Users\Jean\Documents\Reflect
2019-12-02 22:11:48,076 [PL] [1] INFO - Starting MD5 calculation for Nouveau Classeur OpenDocument1.ods. Size 188 MB (197124366)
2019-12-02 22:11:51,917 [PL] [1] INFO - Inserting 1504 parts. Time: 00:00:00.4403088
2019-12-02 22:11:51,930 [PL] [1] INFO - File C:\Users\Jean\Documents\Nouveau Classeur OpenDocument1.ods. Full backup will be created. Parts modified 1504 of 1504
2019-12-02 22:11:52,005 [CL] [1] INFO - Item generated: \\?\GLOBALROOT\Device\HarddiskVolumeShadowCopy90\Users\Jean\Documents\Nouveau Classeur OpenDocument1.ods
2019-12-02 22:11:52,079 [PL] [1] INFO - Command added: Upload operation. Cloud path: E:\destination-bck\CloudBerryBackup\CBB_DESKTOP-SSQ2NJI\C$\Users\Jean\Documents\Nouveau Classeur OpenDocument1.ods$\20191202210338\Nouveau Classeur OpenDocument1.ods. IsSimple: False. Modified date: 02/12/2019 21:03:38. Size: 188 MB (197124366)
2019-12-02 22:11:52,090 [CL] [12] INFO - The queue worker thread 12 started.
...
2019-12-02 22:11:52,499 [PL] [1] INFO - Files to upload count: 1, Data size: 197124366 (188 MB)
2019-12-02 22:11:52,499 [SERV] [1] INFO - Main thread. Waiting for upload complete event
2019-12-02 22:12:06,536 [PL] [6] INFO - Command completed: Upload operation. Cloud path: E:\destination-bck\CloudBerryBackup\CBB_DESKTOP-SSQ2NJI\C$\Users\Jean\Documents\Nouveau Classeur OpenDocument1.ods$\20191202210338\Nouveau Classeur OpenDocument1.ods. IsSimple: False. Modified date: 02/12/2019 21:03:38. Size: 188 MB (197124366)
2019-12-02 22:12:06,812 [PL] [6] INFO - Update history file called again: C:\Users\Jean\Documents\Nouveau Classeur OpenDocument1.ods
2019-12-02 22:12:06,820 [PL] [6] INFO - 1 files copied (total size: 188 MB): C:\Users\Jean\Documents\Nouveau Classeur OpenDocument1.ods
2019-12-02 22:12:06,843 [CL] [6] INFO - Running items count: 0, Awaiting: 0, Paused: 0, NA: 0, Failed: 0, SuccessCount: 0, WarningCount:0, 0 In progress count: 0, Idly Running count: 0
2019-12-02 22:12:06,848 [PL] [6] INFO - Entering sleeping mode event in CommandQueue occurred
...
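The "Parts modified 1411 of 1411" and "1504 of 1504" entries are what one would expect for a compressed container such as .ods (a ZIP archive with deflate-compressed XML). The part counts also match 128 KiB parts (184939671 / 131072 rounds up to 1411), though that part size is only inferred from the logs. The sketch below is a rough illustration and not CloudBerry's actual algorithm: the block size, the sample data and the helper names are all assumptions. It counts how many fixed-size blocks differ after a one-byte edit, first on raw data and then on the zlib-compressed form of the same data.

```python
import hashlib
import zlib
from typing import List

BLOCK_SIZE = 4096  # small demo value; an assumption, not the product's part size


def block_hashes(data: bytes, block_size: int = BLOCK_SIZE) -> List[bytes]:
    """Hash each fixed-size block of `data`."""
    return [
        hashlib.sha256(data[start:start + block_size]).digest()
        for start in range(0, len(data), block_size)
    ]


def changed_blocks(old: bytes, new: bytes, block_size: int = BLOCK_SIZE) -> int:
    """Count blocks that differ at the same offset, plus blocks present in only one version."""
    old_hashes = block_hashes(old, block_size)
    new_hashes = block_hashes(new, block_size)
    differing = sum(1 for a, b in zip(old_hashes, new_hashes) if a != b)
    return differing + abs(len(old_hashes) - len(new_hashes))


# Made-up spreadsheet-like content, then a one-byte edit at the very start.
original = b"".join(f"row {i}: value {i * 7}\n".encode() for i in range(50000))
edited = b"X" + original[1:]

print("raw blocks changed:       ", changed_blocks(original, edited))
print("compressed blocks changed:", changed_blocks(zlib.compress(original), zlib.compress(edited)))
```

On raw data only the block containing the edit changes. After compression the edit usually shifts the rest of the stream, so far more blocks differ and block-level backup has little left to reuse.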

I hope this helps.

Thanks for your help and questions.

Attachments: 04 (155K), 06 (51K), 0707 (48K)

-

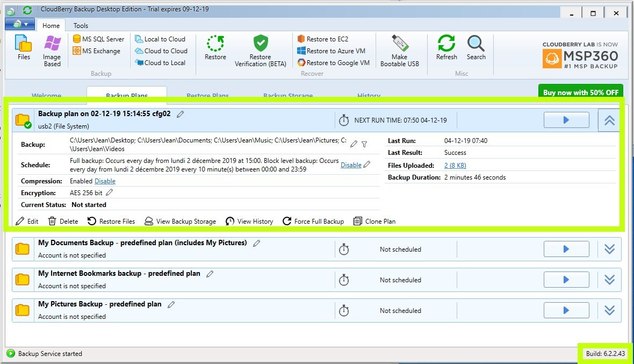

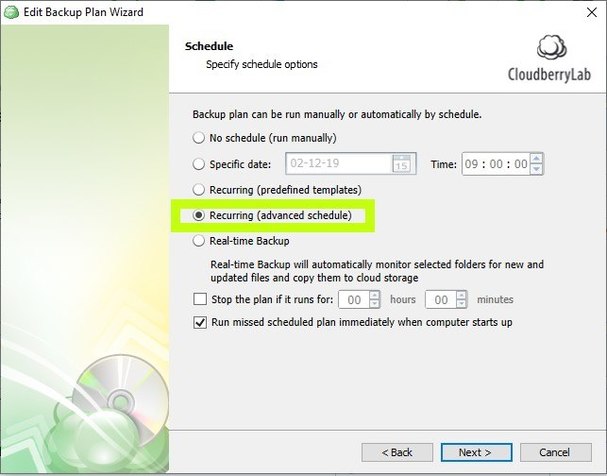

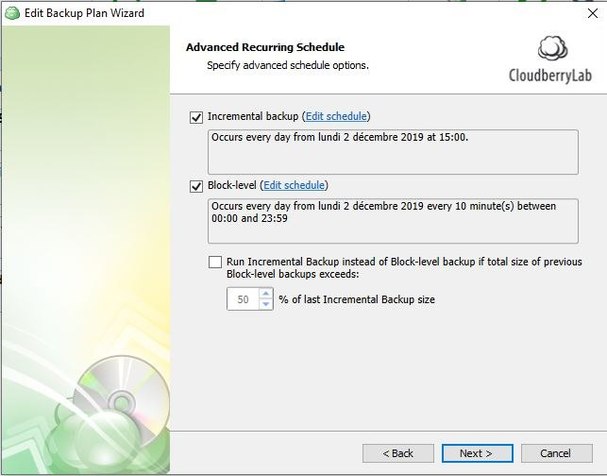

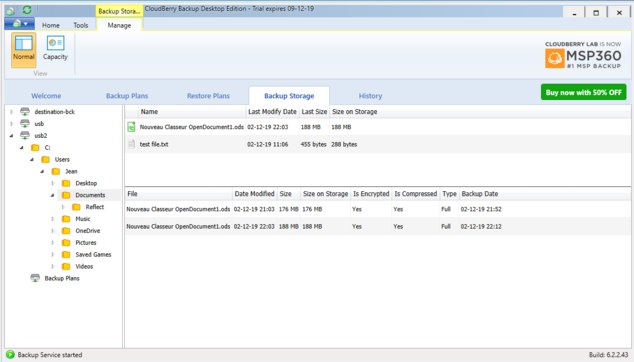

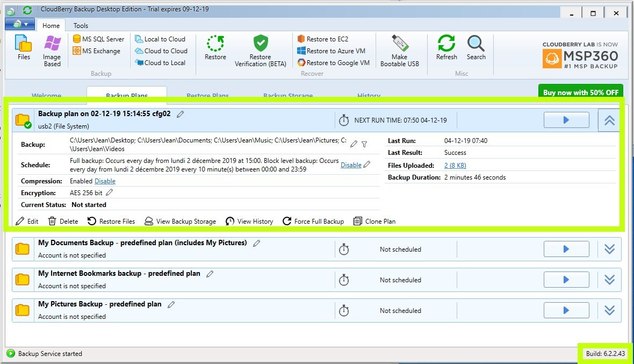

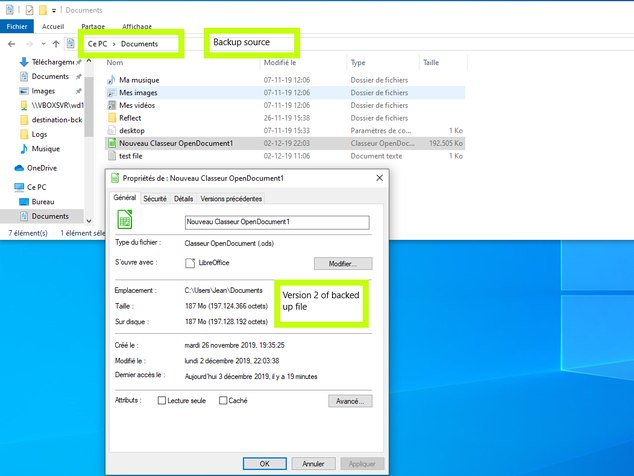

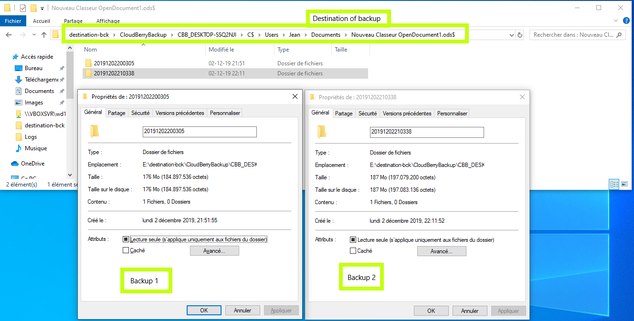

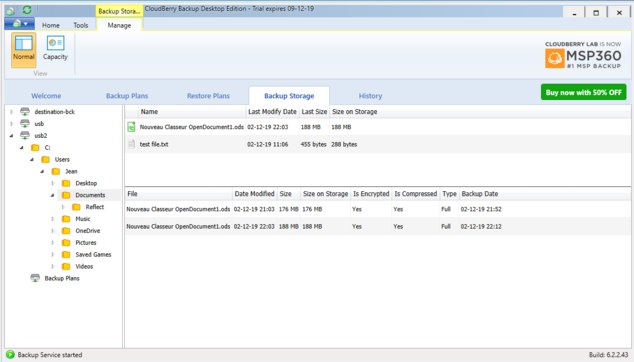

Scheduled Backup (block level) duplicate files when modified instead of just adding blocks

Hello,

3 screens:

01 source of backup

02 backup destination (Explorer view)

03 backup destination (CloudBerry Backup > Backup Storage view)

Are those the ones you asked for?

Thanks for helping me understand and, if possible, adjust.

Attachments: 01 (92K), 02 (140K), 03 (70K)

-

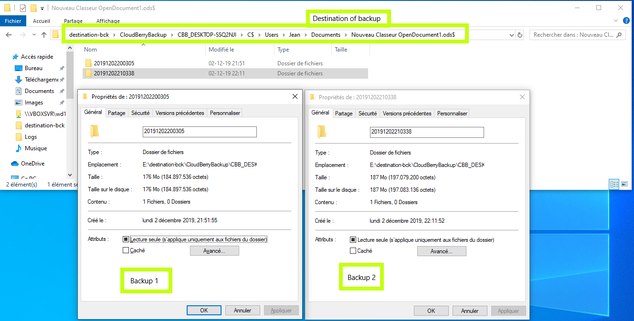

Scheduled Backup (block level) duplicate files when modified instead of just adding blocks

Hello Anton,

- The newly modified (not created) object is about 200 MB. (Don't forget I have not checked the box about incremental backup when x% of the file is modified.)

- How do I know it is not just the changed blocks: I go to the backup destination and browse the folders down to "my documents", then to the folder containing the file versions... There are 2 folders: the first corresponds to the file before modification (+/- 150 MB) and the other to the file after modification (+/- 200 MB)

Thanks -

Realtime file backup on windows, moving file do not seems to be taken into account ?

So a moved file on the source is deleted on destination and then re-uploaded to the "new" destination?

Is there a mechanism in order to avoid file duplication on destination?

Thx -

Realtime file backup on windows, moving file do not seems to be taken into account ?

Hello Matt,

First, thanks for taking my case.

0) About the roaming folder: I noticed these "excessive file movements", so I will follow your advice. Right now I'm doing a POC with CloudBerry and I backed up the entire profile. I will try to exclude folders and/or restrict the backup to the necessary folders.

1) OK, let's see if reducing the number of backed up folders changes something

2) Thanks for information

3) What I mean in other words:

When I move a file from "desktop" to "my documents" on the backed-up computer, what seems to happen on the backup destination is that the file is uploaded again, so it is present in both "desktop" and "my documents". (I have chosen the option to mark deleted files on the backup destination.) Is there an option to avoid uploading the same file twice (replacing it with a kind of link)?
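The "kind of link" idea can be sketched as a content-addressed store: file contents are stored once under their hash, and each backed-up path only references that hash. This is a hypothetical in-memory illustration of the general deduplication technique, not a description of how CloudBerry Backup stores data; `DedupStore` and its methods are made-up names.

```python
import hashlib
from typing import Dict


class DedupStore:
    """Toy backup destination that stores identical content only once."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}  # content hash -> stored content
        self.paths: Dict[str, str] = {}    # backed-up path -> content hash
        self.uploaded_bytes = 0            # bytes actually sent to the destination

    def backup(self, path: str, content: bytes) -> None:
        digest = hashlib.sha256(content).hexdigest()
        if digest not in self.blobs:
            # New content: upload it once.
            self.blobs[digest] = content
            self.uploaded_bytes += len(content)
        # Known or new, the path just points at the stored content.
        self.paths[path] = digest

    def restore(self, path: str) -> bytes:
        return self.blobs[self.paths[path]]


store = DedupStore()
report = b"quarterly figures" * 1000
store.backup("Desktop/report.txt", report)
store.backup("Documents/report.txt", report)  # same file after the move
print(store.uploaded_bytes, len(store.blobs))
```

In this model the moved file costs one extra path entry instead of a second upload, while the old path can still be kept and marked as deleted.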

4) Yes, I'm on the latest version

5) I will test again tomorrow and send you the logs.

Thanks again for all your information! -

Files replaced with older versions not backed up

Hello,

Does this mean that if I copy an old file onto my computer from an email, a USB stick or wherever, without modifying it, it will not be backed up because the file is too old?
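The difference behind this question can be shown with two hypothetical change-detection rules. Neither is claimed to be what CloudBerry Backup actually does; `FileState` and both function names are assumptions for illustration. The first rule only picks up files modified after the last backup run, while the second compares against what was recorded for the file at the previous backup.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FileState:
    mtime: float  # modification time, seconds since the epoch
    size: int     # size in bytes


def needs_backup_newer_only(current: FileState, last_backup_time: float) -> bool:
    """Rule A: back up only files modified after the last backup run."""
    return current.mtime > last_backup_time


def needs_backup_on_any_change(current: FileState, recorded: Optional[FileState]) -> bool:
    """Rule B: back up when the file is new or differs from its recorded state."""
    return recorded is None or current != recorded


# An old file (modified long ago) copied in from a USB stick after the last backup.
last_backup_time = 1_575_000_000.0
old_file = FileState(mtime=1_400_000_000.0, size=2048)
previously_backed_up = FileState(mtime=1_574_000_000.0, size=4096)

print(needs_backup_newer_only(old_file, last_backup_time))              # rule A
print(needs_backup_on_any_change(old_file, previously_backed_up))       # rule B
```

Under rule A the older file is skipped because its modification date predates the last backup; under rule B it is backed up because its date and size no longer match the recorded state.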

Thanks for sharing your knowledge -

Realtime file backup on windows, moving file do not seems to be taken into account ?

Update:

I did the following:

- add a file in "my documents"

- add a file in "desktop"

- "instant backup starts in 10 minutes"... I waited... but the new files did not get backed up...

I stopped the backup job, restarted it, and then, miracle:

- the moved file is deleted from desktop and added to my documents

- the added file in "my documents" is backed up

- the added file in "desktop" is backed up

My questions:

1. Is there an option in real-time backup to make it take new files into account?

Is it normal that the real-time backup does not back up new files unless the backup task is stopped and restarted?

2. Real-time backup scans every 15 minutes, which does not really sound like real time... Is that normal? Is there an option to get true real-time backup?

3. Moving a file = adding a new file (double space, double traffic, double compression, double encryption). Is there a way to optimise this? (I'm using the option to keep and mark deleted files.)

If you have any remarks on, or experience with, any of these 3 questions, I'm interested.

Thanks