Comments

-

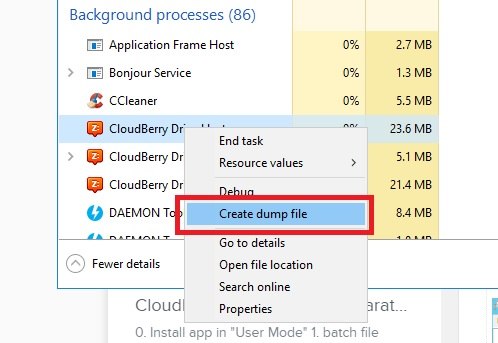

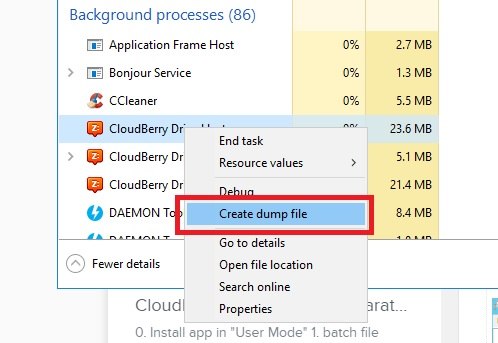

CloudBerry Drive Memory Leak?

We would need a dump of the process after it begins to consume additional resources. By dump I mean the file created by right-clicking the CloudBerry Drive process that is consuming a lot of memory (in Task Manager) and selecting "Create dump file", as shown in the attached screenshot. It will most likely be too large to attach to an email, so you can upload it to a file-sharing service of your choice and send the link to supportatcloudberrylabdotcom.

Please put "Drive memory leak. For Matt" in the issue description.

I'll forward this info to our developers.

Attachment 1515611180103

(34K)

-

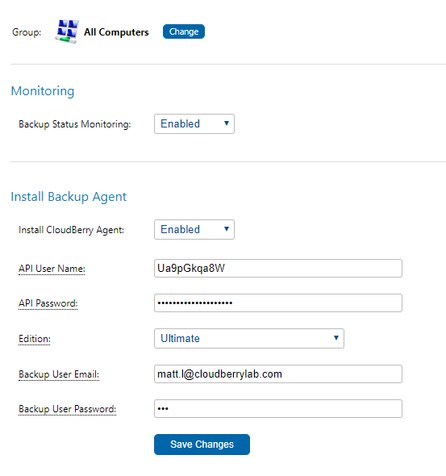

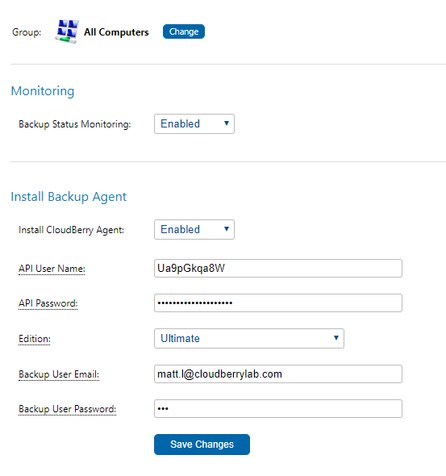

Optitune

It seems there is some kind of misunderstanding. You need to enter your MBS credentials there. API credentials are generated under the settings menu in the MBS portal.

Here's an example in the attachment.

Attachment 2018-04-18 23_43_22-Settings - OptiTune Administration Console

(15K)

-

Optitune

Everything is configured on the Optitune side; you just need to generate API credentials in MBS via the settings menu.

After that, go to backup > settings in the Optitune dashboard and enable the "monitoring" and "install backup" options. There you can enter your MBS credentials.

Emails are configured in the "Alerts" section.

-

Optitune

It was introduced recently, actually: https://www.msp360.com/resources/blog/mbs-now-works-optitune/

-

Refreshing Storage Tab

That behavior is actually expected during consistency checks, since this process updates the info about your data on the storage side.

-

Refreshing Storage Tab

Sorry, currently there is no way to speed up the process. I'll check in with our R&D team and they'll see what can be done.

-

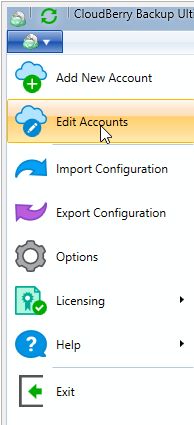

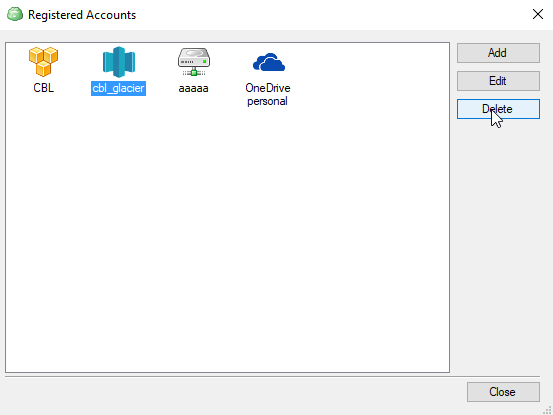

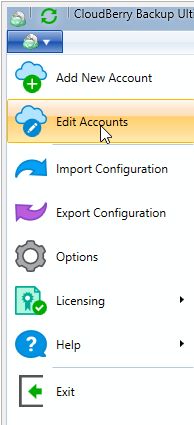

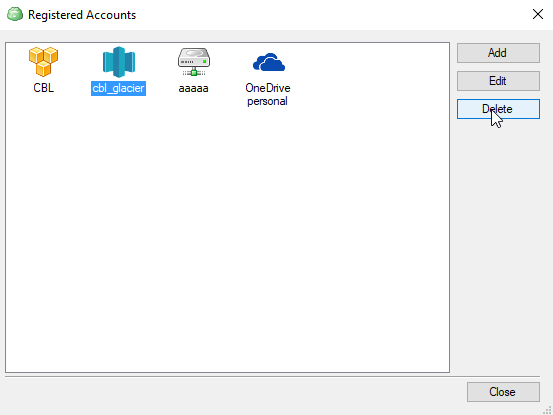

How to delete Backup Storage account?

Regarding your Glacier and Google Drive accounts: to remove them from the software, simply go to the "edit accounts" menu, select them, and click "delete", as shown in the attached screenshots.

Regarding clearing deleted files: go to tools > options > repository, select the corresponding account, and click "synchronize repository". Actions performed outside the software are not automatically registered in the GUI, so you need to perform this operation in cases like that.

Attachments 2018-04-14 13_58_43-

(17K)

2018-04-14 14_00_34-Registered Accounts

(9K)

-

Backing up same files repeatedly

I presume you're referring to ticket #65504, which we've been working on with you.

Please make sure that your current source path mirrors the one in the cloud and on the network share, then synchronize the repository via the tools > options > repository menu.

Regarding the slow upload speed: I'm afraid that's the infamous "Rate Limit Exceeded" error from Google Drive. Google throttles automatic uploads on its side due to API limitations.

-

Error on parsing json text: 'fU`1[System.Char]'

In this output I can actually see that the retrieval job for sourcearchive completed successfully. The suggestion about uploading one small file should help.

Such errors can happen when an operation on the Glacier side takes too long to complete and then expires. The expiration time is about 48 hours, from what I remember.

-

Error on parsing json text: 'fU`1[System.Char]'

Just for troubleshooting purposes, I suggest uploading a small file to the same vault, waiting 24 hours, and then requesting a repository synchronization. Let me know whether you can see the contents of the vault after the process is finished.

-

Backup fails on certain files.

There could be several reasons for that error, including problems with Backblaze services and antivirus/firewall (AV/FW) issues. Usually this message is the result of some other issue, so it would be better to send the logs to support for a proper investigation.

-

Backup Failed - Total Size Limit Exceeded

Yes, the limit is, of course, applied only to your cloud data.

Given the size of the data set, you will be able to upload 1 or 2 incremental backups, but then you will hit the 1 TB limit. Full backups won't be uploaded, since that would most likely exceed the limit.
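To make that arithmetic concrete, here is a minimal Python sketch. The function name `incrementals_that_fit` and all sizes are hypothetical, not taken from the software; it assumes every incremental has the same size, treats 1 TB as 1000 GB, and assumes nothing is purged between full backups.

```python
def incrementals_that_fit(limit_gb: int, full_gb: int, incremental_gb: int) -> int:
    """Hypothetical helper: count equal-sized incrementals that fit after one full backup.

    Assumes nothing is purged between full backups, so every incremental
    adds to the total stored against the limit.
    """
    if incremental_gb <= 0:
        raise ValueError("incremental_gb must be positive")
    if full_gb > limit_gb:
        return 0  # even the initial full backup exceeds the limit
    remaining_gb = limit_gb - full_gb
    return remaining_gb // incremental_gb


# Hypothetical sizes: 1 TB limit (taken as 1000 GB), 700 GB full, 120 GB per incremental.
print(incrementals_that_fit(1000, 700, 120))  # 2
```

With these made-up numbers only 2 incrementals fit, and another full backup of roughly 700 GB clearly would not, which mirrors the situation described above.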

If you think that there's something wrong with initial backup set you can safely delete it from the cloud and start from scratch.

In any case, with your current backup size, it would be better to just upgrade to the Ultimate version, since that 2.7 TB disk won't stay half-empty forever.

-

OSX - Files moved to new drive - any way to avoid new upload

If the only thing that has changed is the drive, I suggest downloading our CloudBerry Explorer (Windows only), entering your cloud credentials, and modifying the cloud path so that it mirrors the one currently being backed up.

After that synchronize the repository in backup software following these steps:

1. Go to Settings - Application - Synchronize Repository.

2. Choose your account from dropdown menu.

3. Press Synchronize Now

After the process is finished, the software should recognize the changes and be able to continue backups from the new source.

-

Backup Failed - Total Size Limit Exceeded

I presume this is the first full backup you're performing. Let me know if that's not the case.

There are several points to keep in mind here:

1. The software can't determine the final size of a backup before compression, so you would only be able to keep 1 version of the data on storage at most, even in compressed form.

2. The same applies when backing up disks that are large but only half full: the final size will be smaller than the whole disk, but that is only determined during the backup operation itself.

3. Currently, purging is only possible after a successful full backup, so anything already in your bucket won't be purged before the new versions are uploaded.
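Point 3 means the storage has to hold the old data and the new full backup at the same time. A minimal Python sketch of that peak-usage check follows; the function name and sizes are hypothetical, and it ignores compression (point 1) by treating sizes as already known.

```python
def full_backup_fits(limit_gb: int, existing_gb: int, new_full_gb: int) -> bool:
    """Hypothetical check: can a new full backup be uploaded under the limit?

    Assumes old versions are purged only after the new full backup succeeds,
    so at the peak both the existing data and the new full backup are stored.
    """
    peak_gb = existing_gb + new_full_gb
    return peak_gb <= limit_gb


# Hypothetical sizes against a 1000 GB limit.
print(full_backup_fits(1000, 600, 550))  # False: peak would be 1150 GB
print(full_backup_fits(1000, 400, 550))  # True: peak would be 950 GB
```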

So the first thing I can suggest is to split that D drive into 2 logical ones.

-

Backups failing w/ 'remote name could not be resolved'

It looks like something in the system is blocking connection to the web dashboard.

If you have any antivirus/firewall software, please add these addresses/ports to its exclusion list.

-

Restore failure: One or more files don't exist on storage

In that case, it would be better to open a case in our support portal. Just go to the tools > diagnostic menu, put the details in the issue description, and send us the logs for investigation.

-

Restore failure: One or more files don't exist on storage

First of all, you need to synchronize the repository on the machine where you're trying to restore the data. That should update the info about your data on storage and allow you to restore the files properly.

-

Restore failure: One or more files don't exist on storage

In that case, it is most likely a repository issue, since the software on one machine doesn't "know" about changes made to the data from the other one, so synchronization should help.
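To illustrate why synchronization helps, here is a simplified Python model. It is a hypothetical sketch, not how the software actually stores its repository: the local database is modeled as a set of object names, and synchronization rebuilds it from a listing of what is really on storage, reporting which entries were out of date.

```python
def synchronize(local_index: set[str], storage_listing: set[str]) -> tuple[set[str], set[str], set[str]]:
    """Hypothetical model of a repository sync.

    Returns (new_index, unknown_locally, stale_locally):
    - unknown_locally: objects on storage the local index did not know about,
      e.g. uploaded from another machine
    - stale_locally: objects the local index lists but storage no longer has,
      e.g. deleted or moved from another machine; restoring these would fail
    """
    unknown_locally = storage_listing - local_index
    stale_locally = local_index - storage_listing
    # After sync, the local index mirrors storage exactly.
    return set(storage_listing), unknown_locally, stale_locally


# Hypothetical object names.
local = {"docs/a.txt", "docs/b.txt"}
storage = {"docs/b.txt", "docs/c.txt"}
new_index, unknown, stale = synchronize(local, storage)
print(sorted(unknown))  # ['docs/c.txt']
print(sorted(stale))    # ['docs/a.txt']
```

In this model, a restore that relies on the stale entry `docs/a.txt` is exactly the "file doesn't exist on storage" case; once the index is rebuilt, that entry is gone.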

Regarding the RM service: please right-click the service icon and select "start service". That's not related to the issue at hand, but it should fix the problem with the service itself.

-

Restore failure: One or more files don't exist on storage

Most likely the local database is not synchronized with the storage.

Please go to the tools > options > repository menu and synchronize the repository for your storage account. After that, retry the restore operation.

-

Insufficient quota to complete the requested service

Are you copying your data to a network share? What OS are you using?

This issue is usually seen on older Windows systems: https://social.technet.microsoft.com/Forums/windows/en-US/43a8805e-d3b3-4525-87b9-13d4547ce404/error-0x800705ad-insufficient-quota-to-complete-the-requested-service?forum=itprovistanetworking

First, I'd suggest increasing your virtual/physical memory size and running backups during non-working hours, since the issue might be related to heavy load on the system. If that fails, it would be better to send logs to support via the tools > diagnostic menu.