
Comments

-

Dev Drive on Windows 11 (ReFS)

"- Item Level Restore is not supported"

Thanks for the reply.

That's a shame, and tells me I should create my ReFS dev drive not as a physical volume, but as a VHDX file on an NTFS volume. In that way, if I need to do item level restore, I'll restore the VHDX file, mount it, then pick out what I want to restore. I wouldn't be able to do this easily with a physical volume. -

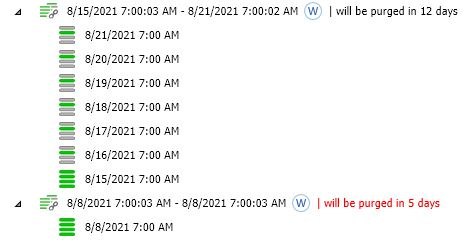

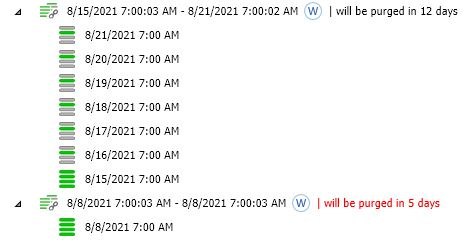

GFS Weekly Keeps Incrementals

...and after the August 29th backup, all the incrementals in the first weekly fell outside the 14-day window and were purged together, leaving just the base full backup, which remains on the weekly retention schedule.

For space planning purposes, I need to plan for these "extra" incrementals that need to hang around a bit longer to maintain the chain. In the case of my plan, that's an extra six daily incrementals in the oldest weekly still needing to keep its chain to satisfy the 14-day overall retention.
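The purge behavior described here can be sketched in Python. This is only a model of the rule inferred from the observed storage history (a chain's incrementals are removed together, and only once every one of them is older than the retention window), not MSP360's documented algorithm. The function name and the year in the dates are hypothetical, since the posts give only month and day.

```python
from datetime import date, timedelta

RETENTION_DAYS = 14  # general retention period from the plan


def purgeable_incrementals(chain, today, retention_days=RETENTION_DAYS):
    """Return the incrementals of one weekly chain that could be purged today.

    Assumed rule: an incremental cannot be dropped while a newer incremental
    in the same chain is still inside the retention window, so the whole
    chain is released at once or not at all.
    """
    if all((today - day).days > retention_days for day in chain):
        return list(chain)
    return []


# Six daily incrementals following the August 8th weekly full (year is arbitrary).
first_week = [date(2021, 8, 9) + timedelta(days=i) for i in range(6)]

print(len(purgeable_incrementals(first_week, date(2021, 8, 28))))  # newest is exactly 14 days old
print(len(purgeable_incrementals(first_week, date(2021, 8, 29))))  # newest is now 15 days old
```

Under this model, nothing in the chain is released until the newest incremental ages out, which is why storage has to be sized for the full chain rather than for 14 days of incrementals alone.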

-

GFS Weekly Keeps Incrementals

Now that I look at this again, it will be interesting to see what happens on August 29th, when all the incrementals in the August 8th weekly are older than 14 days. Maybe that's when they get purged.

I'm guessing now that the program can't purge earlier incrementals that are older than 14 days (like the August 9th one) because they're still needed in the chain to make the ones that aren't yet older than 14 days (like the August 14th one) still usable. -

New Format: Full Backups Only Daily or Monthly. Why no Weekly?

Somehow, I didn't notice the link to this page:

https://help.msp360.com/cloudberry-backup/backup/about-backups/gfs/gfs-assignment-examples

...which gives me the long-term examples I was hoping for. This makes more sense to me now. I'll still experiment with how the general retention period influences the GFS periods, but I think I have a better idea of where to start.

What I'm aiming for: I'd like a 2 week daily retention (general set to 2 weeks), a 4 week GFS weekly retention, and a 3 month GFS monthly retention. If I schedule daily incrementals with weekly fulls, I think this should work, knowing that the most recent 2 weeks of dailies will overlap those weeklies, but that's ok, because I want 2 weeklies beyond the 2 weeks of dailies. Stated another way, I want daily granularity for the first two weeks, weekly granularity for the following 2 weeks, and monthly granularity for the following 3 months. I'll give it a shot and look at the results in backup storage history. -
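As a rough illustration of the goal stated above, the following Python sketch maps a restore point's age to the finest granularity the plan is meant to offer at that age. It models only the intended outcome, not how the software assigns GFS restore points. The names are hypothetical, each retention period is assumed to be measured from the backup date (so the tiers overlap), and 3 months is approximated as 90 days.

```python
DAILY_DAYS = 14        # general retention: 2 weeks of dailies
WEEKLY_DAYS = 4 * 7    # GFS weekly retention: 4 weeks
MONTHLY_DAYS = 3 * 30  # GFS monthly retention: 3 months, approximated as 90 days


def finest_granularity(age_days):
    """Return the finest restore granularity expected for a given age in days."""
    if age_days <= DAILY_DAYS:
        return "daily"
    if age_days <= WEEKLY_DAYS:
        return "weekly"
    if age_days <= MONTHLY_DAYS:
        return "monthly"
    return "none"


for age in (10, 20, 60, 120):
    print(age, finest_granularity(age))
```

Comparing this table against the backup storage history after a few weeks of runs is one way to check whether the plan settings produce the intended result.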

New Format: Full Backups Only Daily or Monthly. Why no Weekly?

Thanks, David. I'm having one of those "why didn't I think of that?" moments.

I notice the default day when selecting "Daily" is Sunday. I recall from one of the help files that the software treats Sunday as the day of the week for weekly retention.

So, if I select "Daily" only for Sunday, how do monthly backups get retained? Are they the nearest weekly full plus incrementals which comprise the first day of the month?

The help center file on GFS can be difficult to understand. More common-use examples which span what happens over many weeks/months would better illustrate how this works. -

BackBlaze Confusion

BTW - I'm still waiting for GFS. That will make retention semantics much easier.

-

BackBlaze Confusion

That also makes sense. I have my backup jobs save the last 3 versions of backed-up files. I let MSP handle that aging (in other words, B2 doesn't do any file aging of its own). And it's possible that those old versions stretch back in time to when I was using version 5.X.

But in an emergency, if I need to restore files without using MSP (i.e. using B2's web client to get the files) it makes piecing together things a bit complicated. Hopefully, that disaster never happens. -

BackBlaze Confusion

Thanks for the reply. That agrees with my history: I first used the program at 5.9.x and upgraded to version 6 when it became available. So, if I follow you, can I purge that 5.x-created directory on B2 (the smaller C: on B2) because 6.x only knows about and manages the storage in C$? That would also make sense because I added the Public directory to my backup plan after upgrading to 6.x, which is why it's only listed under C$.

-

Replacing Windows Server Essentials Client PC Backups

Thanks for the quick reply, especially on a weekend :grin:

Not sure which you're asking about, so here's both:

WSE does image backups, but the restore wizard allows you to restore an entire system, just a volume, or pick files and/or folders.

Right now, my current CloudBerry jobs to BackBlaze B2 are daily file backups of the key user file directories (Documents, Pictures, Music, Videos, etc.), which keep the last 3 file versions and purge deletions after 30 days. BackBlaze is my 5th level backup (1. loss of file, 2. loss of disk, 3. loss of PC, 4. loss of house, 5. loss of bank vault (loss of city)), so the files are more important than OS images, because the PCs and my WSE server are presumably totally destroyed in catastrophes 4 and 5. Skipping the OS keeps the cloud backups smaller, but gosh, BackBlaze is so inexpensive. The total I store today in BackBlaze to back up 2 PCs and my WSE server shares is about 500GB, costing me about $2.50 a month in storage. -

Backup of same file from two different locations

Friendly suggestion (which works for me):

Set up a storage bucket just for testing. Create test backup jobs that back up a small toy set of data (so the backups run quickly) but are rigged to exercise what you want to test (like the directory setup in your post).

Because these jobs and their data are isolated from my "production" data, I can run backup jobs to my heart's content to experiment with how various backup/restore job settings work. I then apply what I learn to my production jobs.

Short summary: Not sure how something works? Run a small test job. -

Backup Plan configuration to minimize charges on Amazon Glacier

I'll second this. I started out trying to use Glacier, and found it not very friendly for my home server & home network use cases. I found Backblaze B2 much easier to work with (you can access your files in real time and with a web client) and I think the storage costs are a bargain.

-

Best practise for first backup to harddisk and then switch to cloud

I'm wondering what will happen to backups on cloud storage (like B2 in my case) that currently use colons as their "special delimiter". Ideally, the change will not require fresh uploads of data. Any ideas yet on how you'll deal with data already backed up?

-

Restore from B2 snapshot copied to local drive

Hi Vlad,

This is excellent. And thanks for the quick reply. When I use BackBlaze's web client to look at my backup data in the bucket, I see the trailing colons. It looks like BackBlaze converts these to underscores when it creates the snapshot.

I ran two tests:

1) My toy snapshot was small enough for me to manually replace trailing underscores with dollar signs as a proof of concept. With some trial and error, I noticed the drive letter directory in my snapshot had two trailing underscores which both needed to be replaced with a single dollar sign for the restore to work (i.e. 'H__' to 'H$'). The restore worked as expected (The directory tree was what I expected, and all the files at the leaves were decrypted).

2) I tried your PowerShell script on a fresh copy of the snapshot. I added an additional line at the top to deal with the double underscore suffix of the drive letter directory as follows:

Get-ChildItem -Recurse | Rename-Item -NewName {$_.Name -Replace "__$",'$'}

Get-ChildItem -Recurse | Rename-Item -NewName {$_.Name -Replace "_$",'$'}

Get-ChildItem -Recurse | Rename-Item -NewName {$_.Name -Replace "_acl",'$acl'}

The script worked great, and I was able to successfully run a restore job against the modified snapshot with the results I expected.
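For anyone who would rather not use PowerShell, the same three substitutions can be sketched in Python. This is an untested-against-B2 equivalent, not the script from this thread: the function names are hypothetical, and it assumes the snapshot follows the naming described above (a trailing `__` on the drive letter directory, a trailing `_` elsewhere, and `_acl` in place of `$acl`). It walks the tree bottom-up so that a directory is renamed only after everything inside it has been handled.

```python
import os
import re


def restored_name(name):
    """Apply the three substitutions in the same order as the PowerShell lines."""
    name = re.sub(r"__$", "$", name)    # e.g. 'H__' -> 'H$'
    name = re.sub(r"_$", "$", name)     # any remaining single trailing underscore
    return name.replace("_acl", "$acl")


def rename_snapshot(root):
    """Rename every file and directory under root (root itself is left alone)."""
    # topdown=False visits children before parents, so renaming a directory
    # never invalidates a path that still has to be visited.
    for parent, dirs, files in os.walk(root, topdown=False):
        for name in dirs + files:
            new_name = restored_name(name)
            if new_name != name:
                os.rename(os.path.join(parent, name),
                          os.path.join(parent, new_name))
```

Run it against a copy of the snapshot, for example `rename_snapshot(r"D:\snapshot-copy")` with whatever path the copy lives at, so the original download stays untouched if a name is converted incorrectly.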

Woo Hoo! Thanks again. Knowing I can do this gives me more restore options if that disaster ever strikes.

Jon T.

P.S. This might make a good knowledge base article (if it isn't already) since I'm sure I won't be the only B2 user to want to try this.