Comments

-

MSP portal SMTP does not support TLS it appears

Well, Matt, I have a problem now. It was working before on the MSP portal's SES. I only changed it because I wanted the emails to route through our 365 service going forward. Now I realize, after the fact, that this isn't supported (hint hint--it would have been nice to read a blurb beforehand about which SMTP relay features are supported and which are not), and I have a broken notification service. Please switch it back from the back end, since I cannot do this from my side. Ticket ID: #254044

-

Clarification on versioning

I understand that the controlling mechanism for retention as a whole is the Delete versions older than option.

-

Clarification on versioning

Perfect. That's what I thought when I first configured this policy, but I was second-guessing my assumption that all versions would be kept by default unless the Keep number of versions (for each file) option was specified.

-

Real-time backup use case

Unfortunately not. :( But I have yet to revisit David's latest suggestions.

-

Real-time backup use case

Hey guys, me again with the same goal of trying to squeeze every last drop of performance out of this use case. I am going with your recommendation of NOT using real-time backup but rather scheduled backup. David, I don't have an easy way to test the SAN speed, but I honestly don't believe that is the bottleneck, as we are talking SAS disks in RAID10 with VMware VMs. I would like to focus on some other possibilities.

I'm still getting the following results on this file server. As you can see, backing up 173 files totaling 1.16MB on 4/27/2019 to a local NAS took 7 hours! Backing up 720 files totaling 91.28MB to Azure took 13.5 hours.

So I increased the RAM and virtual cores provisioned to the file server. Now the VM has 8GB RAM and 6 virtual cores. However, I am seeing that CloudBerry still does not use everything that is available, so there is likely a bottleneck elsewhere. Can you take a look at these settings and let me know what you recommend?
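To put the job results above in perspective, here is a quick back-of-the-envelope calculation of the effective throughput and the average time per file. It is only a rough sketch: the function names are made up for illustration, and it assumes 1 MB = 1,000,000 bytes and that the reported durations cover the whole job, scan included.

```python
def effective_throughput(megabytes, hours):
    """Average bytes per second, assuming 1 MB = 1,000,000 bytes."""
    return megabytes * 1_000_000 / (hours * 3600)


def seconds_per_file(file_count, hours):
    """Average wall-clock seconds spent per backed-up file."""
    return hours * 3600 / file_count


# Figures taken from the two job results quoted in the post.
nas_rate = effective_throughput(1.16, 7)        # local NAS job
azure_rate = effective_throughput(91.28, 13.5)  # Azure job

print(f"NAS:   {nas_rate:,.0f} B/s, {seconds_per_file(173, 7):.1f} s per file")
print(f"Azure: {azure_rate:,.0f} B/s, {seconds_per_file(720, 13.5):.1f} s per file")
```

Both jobs average well under 2 KB/s and more than a minute per backed-up file, which is why the time is more plausibly going into something other than moving the data itself.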

-

Real-time backup use case

It is the data volume of a file server VM (VMware) with a SAN backend. Approx. 1TB of files (document type).

-

Real-time backup use case

Yeah, makes sense. How can I get the scan speed optimized? Today I enabled the Fast NTFS option on the client shown in the screenshot above to see how that improves the situation.

-

Real-time backup use case

Ok, so the reason I was interested in real-time backup is that I have a similar client who is using File backup for a file server's data set. As you can see here, the scan still takes an enormously long time just to back up a few files! (The client has about 100Mbps upstream, so that is definitely not the bottleneck.) I was hoping for an alternative route to avoid this lengthy scan time for the new use case.

-

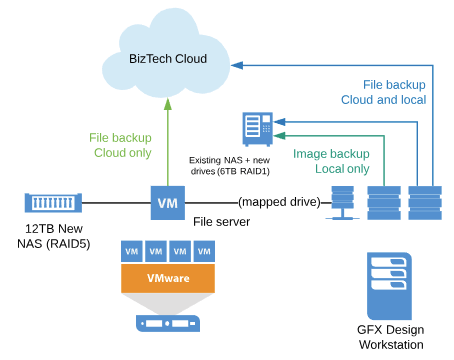

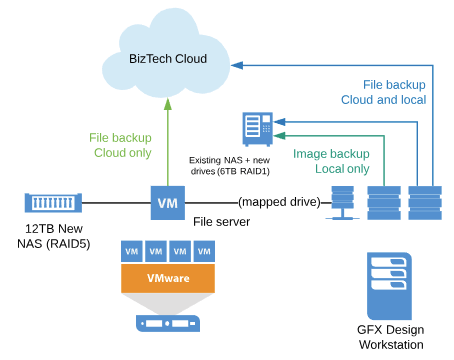

Missing Simple Mode

Here is also a diagram of the actual setup that will be implemented. The dataset on the left (12TB) won't be fully utilized yet. The actual data is only 4TB in size, so the new repo is sized at 3x to allow for a few years' growth.

The diagram also shows that a license will be provisioned on the VM that will house the iSCSI LUN with image data. Another license will be installed on the graphic designer's machine (right).

Attachment: chrome_2019-03-18_09-45-37 (21K)

-

Missing Simple Mode

I was just using a license for my test PC to work through settings and make sure all was available that I expected to use.

-

Missing Simple Mode

Sure thing. It is described over on this post: https://forum.cloudberrylab.com/discussion/783/real-time-backup-use-case. I was trying to keep the questions separate so as to avoid confusion, but the use case is the same.

-

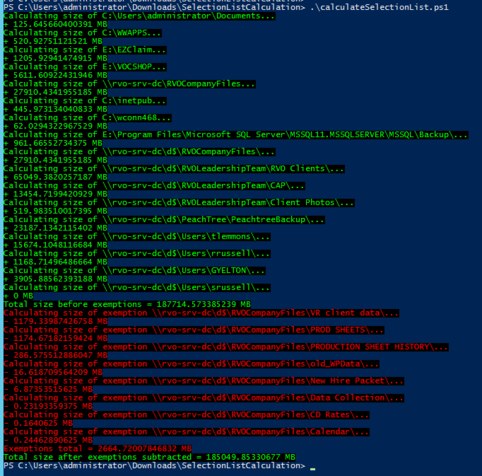

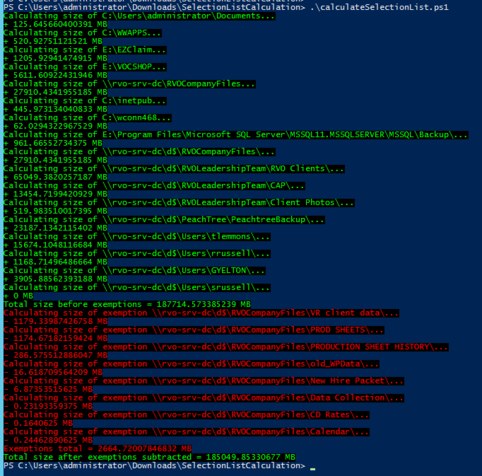

Selection list calculation

Ok, so I have bought some time on this... I created a PowerShell script that reads in two files: (1) selectionlist.txt and (2) exemptionlist.txt. I was able to copy the contents of the MSP > Plan Name > Backup Source boxes into my two files. Then I ran my PS script, which adds up the size of the Include Paths, subtracts the Exclude Paths, and gives me the total space used by the selection list.

Figure 1

I have attached the script in case anyone else needs this.

Attachments: ScreenConnect.WindowsClient_2018-08-10_09-56-32 (21K), calculateSelectionList.ps1 (1K)
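For anyone who cannot open the attachment, the idea can be sketched roughly as follows. This is not the attached calculateSelectionList.ps1; it is a Python approximation of the same approach, and it assumes each list file holds one path per line. The function names are hypothetical, and instead of adding everything and then subtracting, it simply skips excluded paths while walking the includes, which gives the same total.

```python
import os
from pathlib import Path


def read_paths(list_file):
    """Return the non-empty lines of a text file as Path objects."""
    with open(list_file, encoding="utf-8") as fh:
        return [Path(line.strip()) for line in fh if line.strip()]


def is_excluded(path, excludes):
    """True if path is an excluded path or sits underneath one."""
    return any(path == ex or ex in path.parents for ex in excludes)


def selection_size(includes, excludes):
    """Sum file sizes (bytes) under the include paths, skipping excluded ones."""
    total = 0
    seen = set()  # avoids double counting when include paths overlap
    for root in includes:
        if root.is_file():
            candidates = [root]
        else:
            candidates = (
                Path(folder) / name
                for folder, _, files in os.walk(root)
                for name in files
            )
        for file_path in candidates:
            if file_path in seen or is_excluded(file_path, excludes):
                continue
            seen.add(file_path)
            try:
                total += file_path.stat().st_size
            except OSError:
                pass  # unreadable or vanished file: leave it out of the total
    return total
```

Calling `selection_size(read_paths("selectionlist.txt"), read_paths("exemptionlist.txt"))` returns the total in bytes; divide by `1024**3` for GiB. Exclusions here are matched as exact paths only, so wildcard-style exemptions would need extra handling.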

-

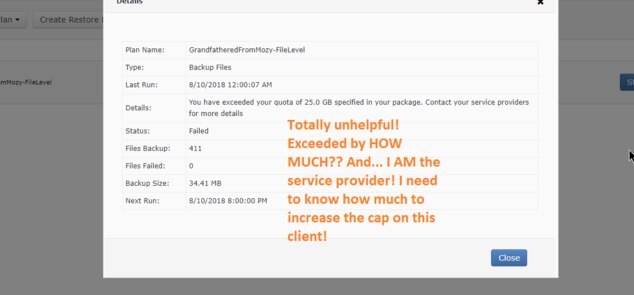

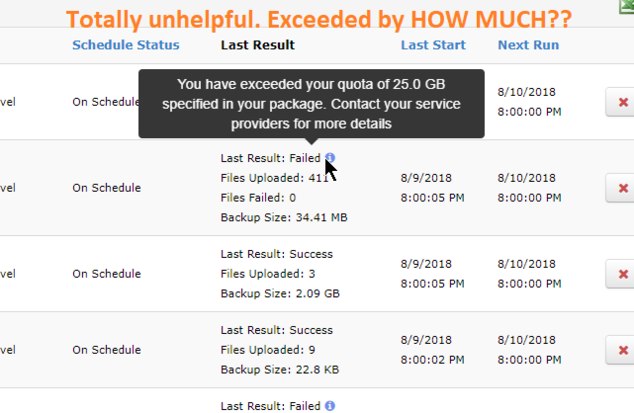

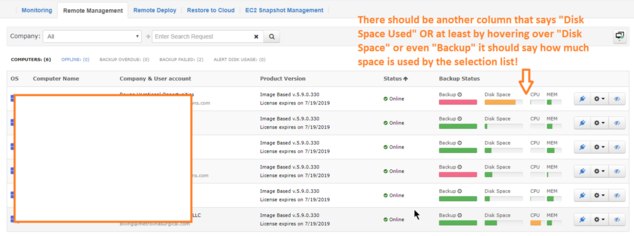

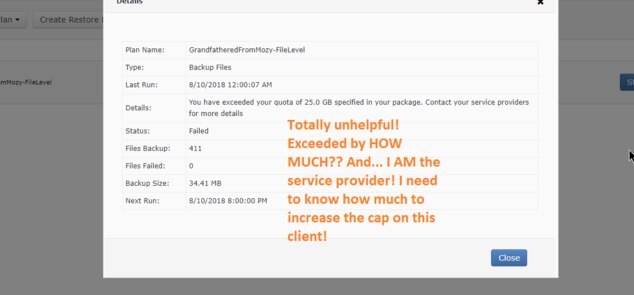

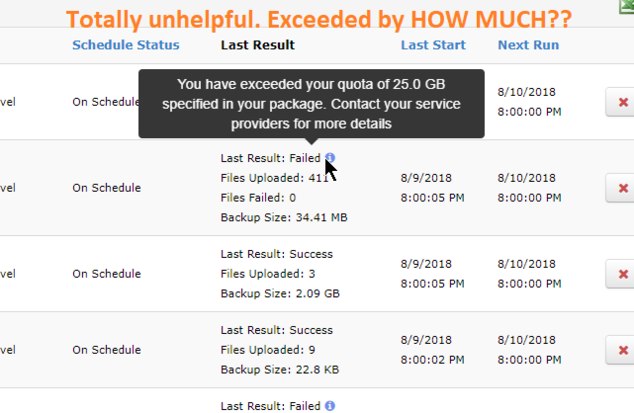

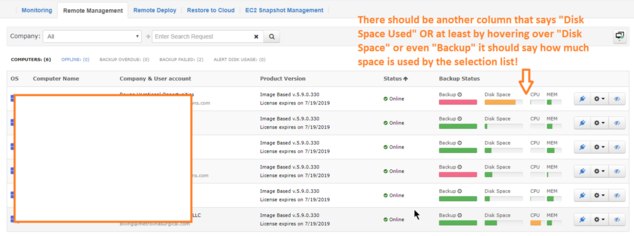

Selection list calculation

This needs to be HIGHEST priority. Case in point: I have a client that is assigned 25GB of cloud storage. Yet they wanted to add some more folders AND some UNC paths to the selection list. Additionally, the UNC paths include Exemptions, i.e. things NOT to back up. Obviously they are hitting the cap. So I need to answer a simple question--"How much more cloud storage do they need?"

I started to use Windows Explorer > right click > Properties on each folder in the selection list, plus Calculator, to manually tally up the total size of the selection list. But then I got to the UNC paths, which I could also do this way EXCEPT for the fact that they include dozens of Exemptions! So I would have to ADD first and then SUBTRACT all the Exempted folders and files. This is a NIGHTMARE.

This needs to be resolved ASAP. I'm not asking for a beautiful "selection list analysis tool" that looks like TreeSize or WinDirStat. I am asking for a simple value SOMEWHERE on the mspbackups.com site, such as depicted below.

Example 1

Example 2

Example 3

Attachments: chrome_2018-08-10_09-33-13 (29K), chrome_2018-08-10_09-31-43 (23K), chrome_2018-08-10_09-28-51 (222K)

-

Selection list calculation

Hey David, thanks for the response. The thing is, I can easily drill down into each folder, one at a time, using Windows Explorer too. But that is a hassle. Since CB is already aware of my selection list, there should be an easy tab or page or button for the software to do this work for me. It should tally up the current size of the selection list without requiring me to drill down into one folder, back out, drill down into another, and back out again, all while recording the details in a spreadsheet to come up with a sum. This is basic selection list metadata, aka total selection list size.

James Wilmoth

- © 2025 MSP360 Forum