-

Editing doesn't seem to work

That's a limitation of the Freeware version. In the Trial and Commercial versions, editing should work without problems.

-

Changes to backup plan AFTER initial backup upload will require a new upload?

Some clarification regarding that:

If you change compression settings, you would need to manually delete the previously uploaded files on the storage side and start from scratch if you want your backups to be consistent in that regard. The same applies to the block-level option, but old backups will be purged by your retention policy anyway, so in most cases you should be fine without re-uploading.

If you change encryption settings, all previously backed-up data will be overwritten during the next run of the backup plan.

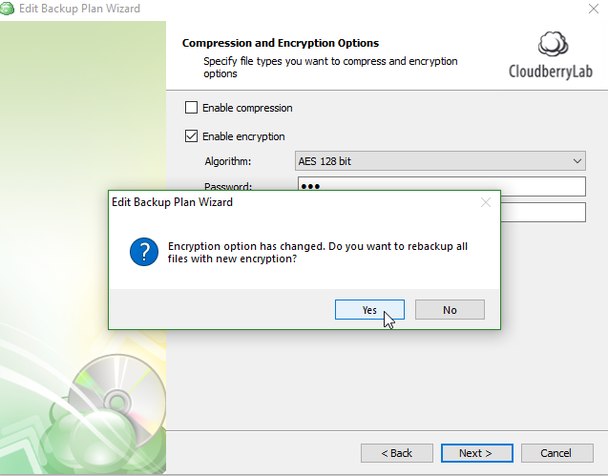

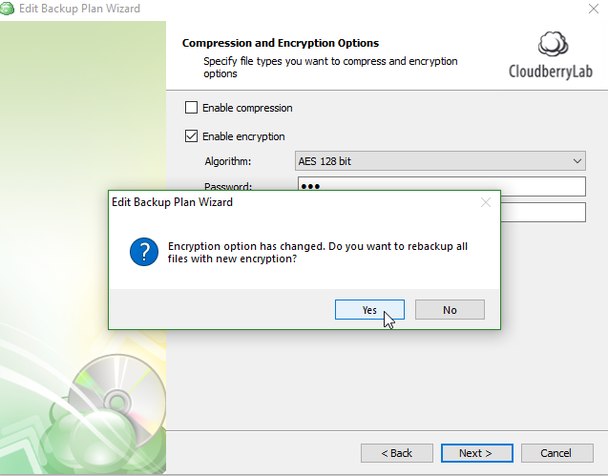

When you change encryption settings, you will see a pop-up window like the one in the attached screenshots. Clicking Yes will re-upload all previously backed-up data with the new encryption options.

The retention policy plays no role in encryption settings; it only deletes files according to your parameters.

-

Realtime backup only during specific time period?

Hi!

Yes, regular scheduled backups will work fine and continue uploading as intended.

We actually recommend using standard backup plans instead of real-time ones, especially if you're backing up large amounts of data.

-

Files in root directory not listed - Cloud (FTP) to local

Hi!

Yes, that's how Cloud to Local backups currently work. I'll file a feature request with our developers on your behalf to change that.

-

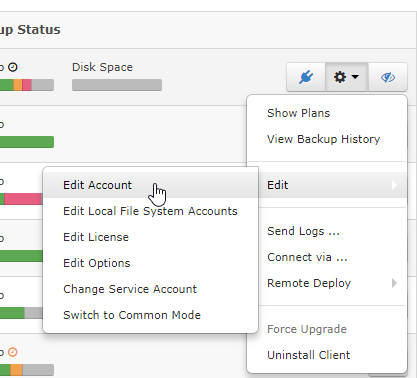

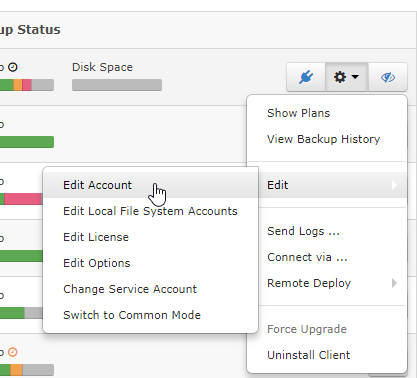

Can I change the installed module without removing and reinstalling the software?

If you're on the latest version, you can do that via your web dashboard.

Go to RMM > Remote Management, find the computer in question, click the gear icon, and select "edit account"; in that menu you can change the product edition.


-

Backup Plan Suggestions / Tips - Required

Retention is based on the dependency between block-level backups and full backups, similar to how SQL backups depend on differential and full backups.

You can check the following articles regarding the topic:

https://www.msp360.com/resources/blog/backup-retention-policies/

https://www.msp360.com/resources/blog/scheduling-full-backup/

https://www.msp360.com/resources/blog/block-level-backup/

-

Retention Policies

1) This option refers to the timestamp you see in Windows Explorer for your files.

2) "Always keep the last version" means that if, for some reason, only one version of a file is left on the storage side, it will never be purged.

3) The "Keep number of versions..." option won't override the second setting, but it can override the first one. In practice, once data reaches the first threshold of 2 years, the software will purge it even if fewer than 10 versions have been generated on the storage side. It will also start deleting files that are not yet 2 years old if more than 10 versions have been created.
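The three settings above can be modeled roughly as follows. This is a minimal sketch, not the software's actual purge logic: `Version` and `versions_to_purge` are hypothetical names, 730 days stands in for the 2-year threshold, and a version is assumed to be purged when it exceeds either the age threshold or the version-count threshold, except that the newest remaining version is always kept. The "delete files that have been deleted locally" option is not modeled.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Version:
    # Hypothetical record for one stored version of a file.
    timestamp: datetime


def versions_to_purge(versions, now, keep_days=730, keep_versions=10,
                      always_keep_last=True):
    """Return the versions a retention pass would delete (illustrative only)."""
    # Sort newest first, so index 0 is the most recent version.
    ordered = sorted(versions, key=lambda v: v.timestamp, reverse=True)
    cutoff = now - timedelta(days=keep_days)
    purge = []
    for index, version in enumerate(ordered):
        if index == 0 and always_keep_last:
            continue  # rule 2: the newest remaining version is never purged
        too_old = version.timestamp < cutoff   # rule 1: age threshold
        too_many = index >= keep_versions      # rule 3: version-count threshold
        if too_old or too_many:
            purge.append(version)
    return purge
```

For example, with 12 versions that are all a few days old, the two oldest would be purged by the count rule, while a single version older than 2 years would be kept because of rule 2.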

In addition, the option to delete files that have been deleted locally can override any of the settings above.

-

Cloudberry linux Cli

All backups are incremental by design, so only the files that have changed since the last backup run will be transferred to the storage side during the next upload.
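As a rough illustration of incremental selection, the sketch below compares each file's size and modification time with a manifest saved from the previous run. How the software actually detects changes isn't described here, so `scan`, `files_to_upload`, and the (size, mtime) signature are assumptions for illustration only.

```python
import os


def scan(root):
    """Map each file path under root to a (size, mtime in ns) signature."""
    manifest = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            st = os.stat(path)
            manifest[os.path.relpath(path, root)] = (st.st_size, st.st_mtime_ns)
    return manifest


def files_to_upload(previous, current):
    """Return paths that are new or whose signature changed since the last run."""
    return sorted(path for path, sig in current.items() if previous.get(path) != sig)
```

A run would call `scan()` on the source folder, upload whatever `files_to_upload()` returns, and then save the new manifest for the next comparison.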

-

Backup Plan configuration to minimize charges on Amazon Glacier

If you often rename, move, or delete your files, it is better to disable the "delete files that have been deleted locally" option. If you need that option, you can instead set the purge delay to 90 days.
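The 90-day figure comes from Glacier's minimum storage duration: an object deleted earlier is billed for the remaining days. The sketch below estimates that charge. `early_deletion_days` and `early_deletion_charge` are hypothetical helpers, the per-GB-month price is a placeholder parameter rather than a real AWS rate, and treating a month as 30 days is a simplifying assumption.

```python
MINIMUM_STORAGE_DAYS = 90  # Glacier minimum storage duration discussed in this thread


def early_deletion_days(age_days, minimum_days=MINIMUM_STORAGE_DAYS):
    """Days still billed if an object of this age (since upload) is deleted now."""
    return max(0, minimum_days - age_days)


def early_deletion_charge(size_gb, age_days, price_per_gb_month,
                          minimum_days=MINIMUM_STORAGE_DAYS):
    """Approximate pro-rated charge, assuming a 30-day month (hypothetical helper)."""
    remaining = early_deletion_days(age_days, minimum_days)
    return size_gb * price_per_gb_month * remaining / 30
```

Because the purge delay starts counting when a file is deleted locally, which is always after it was uploaded, a 90-day delay means the object is at least 90 days old when it is purged, so `early_deletion_days()` returns 0.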

Regarding versioning: since early deletion fees apply only to files deleted within 90 days of upload, you can configure the plan to delete only versions older than 90 days (or more). That way you won't be charged extra for early deletion of your data.

-

Backup Plan Suggestions / Tips - Required

I think it depends on how often you plan to run your backups and whether you use block-level functionality.

In your case it would be quite difficult to keep 5 months' worth of versions with this setup, since you would barely be able to fit 6 full backups on that disk.

The best choice would be to enable block-level backup and try running it with the settings shown in your screenshots. Keep in mind that purging only happens when a new full backup runs, and it's actually better to run full backups more often than once every 5 months.
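The dependency between block-level backups and full backups, and the reason purging waits for a new full backup, can be sketched as follows. This is an illustrative model under stated assumptions, not the software's retention code: `Chain`, `purgeable_chains`, and `max_fulls_stored` are hypothetical names, and the estimate assumes full backups run at a fixed interval with block-level backups in between.

```python
import math
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Chain:
    """Hypothetical model: one full backup plus the block-level backups based on it."""
    full: date
    blocks: list = field(default_factory=list)

    def newest(self):
        # A chain is only as old as its most recent backup.
        return max([self.full, *self.blocks])


def purgeable_chains(chains, keep_after):
    """Chains that could be deleted as a whole.

    Blocks cannot be restored without their full backup, so a chain is removed
    only when all of its backups are older than keep_after. The most recent
    chain is never removed, since no newer full backup exists yet.
    """
    ordered = sorted(chains, key=lambda c: c.full)
    return [c for c in ordered[:-1] if c.newest() < keep_after]


def max_fulls_stored(retention_days, full_interval_days):
    """Rough worst-case number of full backups on storage just before a purge."""
    return math.ceil(retention_days / full_interval_days) + 1
```

For instance, under this model a 150-day retention period with a full backup every 30 days could leave up to `max_fulls_stored(150, 30)`, i.e. 6, full backups on storage at once, which is why free space tends to be the limiting factor.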

The most problematic point in your setup is, of course, the lack of space. Since it's difficult to predict how much space that data will take up over several months, it's equally difficult to suggest an exact number for your retention policy.

-

Wanna make sure i'm doing it correct

Yes, we're actually working on implementing that. There's no concrete ETA yet, but the current target release is version 6.1. I've added you to the list of people to be notified when this feature becomes available.

-

Wanna make sure i'm doing it correct

Yes, that should work without problems if everything is set up correctly. I can't think of anything to add at the moment, since that setup is quite easy to configure.

If you want to perform off-site full image backups quickly and effortlessly in the future, the best choice would be to upload the data to S3 using the synthetic full option. That way, after the first full upload, full backups will be almost as quick as block-level ones.

-

Featurerequest: Hyper-V Backup

Actually, if that VM is available on your local network, you can create a restore plan and specify a folder on the VM as the destination for those files.

-

Featurerequest: Hyper-V Backup

Could you please clarify your use case?

From what I understand, you want to perform a file-level restore from a VM backup into the VM itself, without running the software inside the VM, by creating a restore plan in the VM build installed on your host machine. Is that correct?

-

Issue 5.9 - View Blockcontent

Make sure you're using the latest version of the software and that your repository is synchronized (tools > options > repository). If you still see the same issue, please send us the diagnostic logs for investigation via the tools > diagnostic menu.

-

Moving files from one account to another is slow

It is better to troubleshoot such issues via our support system.

Please go to the tools > diagnostic menu and send us the diagnostic logs for investigation.

-

Pause Image Based Backup Feature

That functionality is not available yet, but I've filed a feature request with our developers.

-

Best practise for first backup to harddisk and then switch to cloud

This will only affect new uploads to local destinations. The implementation itself is still being discussed, but you won't have to re-upload your whole data set.

-

SSL_ERROR_SYSCALL Error observed by underlying SSL/TLS BIO: Connection reset by peer

Please send us the logs via the Feedback menu so we can properly investigate the problem.

-

Best practise for first backup to harddisk and then switch to cloud

Yes, that is a known issue. In a future update we'll use $ signs universally for all types of storage destinations to avoid problems like that. Currently, the "adopt" feature is flawed.