Forum tip: Always check when replies were posted. Technology evolves quickly, so some answers may no longer be up to date.

Comments

-

Seeding Image Based Backup

If you're using the so-called "pure Glacier" (i.e. the one that uses Vaults), you won't be able to use any kind of seeding because of how it works. Note that restoring image-based backups from Glacier can be a long and somewhat painful process, again because of how this storage class works.

As for local backup precedence, we currently don't have anything like that. If upgrading your internet connection is not an option, the best approach would be to simply use local backups instead of cloud ones.

-

Seeding Image Based Backup

Seeding an image-based backup is possible, but it would still require periodic full backups to keep versioning and purging in check. If you're using S3 or Wasabi, it's better to use synthetic full backups in that case.
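To illustrate the idea behind a synthetic full (this is not our actual implementation), here's a minimal Python sketch: a new full backup is assembled from the previous full plus the incrementals that are already in storage, instead of re-reading and re-uploading all the data from the source machine. The block-based data model and the `synthetic_full` function are hypothetical, and the sketch ignores blocks that were deleted on the source.

```python
from typing import Dict, List

# Hypothetical data model: a backup version maps block index -> block bytes.
Blocks = Dict[int, bytes]


def synthetic_full(base_full: Blocks, incrementals: List[Blocks]) -> Blocks:
    """Merge a full backup with its later incrementals into a new full.

    Incrementals are applied in chronological order, so newer blocks
    replace older ones; the original full is left untouched.
    """
    merged = dict(base_full)
    for inc in incrementals:
        merged.update(inc)
    return merged


if __name__ == "__main__":
    full = {0: b"AAAA", 1: b"BBBB", 2: b"CCCC"}
    inc1 = {1: b"bbbb"}
    inc2 = {2: b"cccc", 3: b"DDDD"}
    print(synthetic_full(full, [inc1, inc2]))
    # prints {0: b'AAAA', 1: b'bbbb', 2: b'cccc', 3: b'DDDD'}
```

Because the result is built only from data already in the bucket, the source machine doesn't have to send the unchanged data again, which is what makes it attractive when upload bandwidth is the bottleneck.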

-

Basic block level vs file backup

We've never seen any kind of data corruption during uploads, but we do perform MD5 checksum verification for all clouds, including B2.
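If you'd like to double-check a file yourself, the general idea of a checksum comparison looks like this minimal Python sketch: compute the MD5 of the local file in chunks and compare it with the checksum the storage reports for the uploaded object. This is only an illustration, not the code our software uses; `verify_upload` is a hypothetical helper, and where `remote_md5` comes from depends on the storage provider.

```python
import hashlib
from pathlib import Path


def md5_of_file(path: Path, chunk_size: int = 1024 * 1024) -> str:
    """Return the hex MD5 digest of a file, reading it in chunks to limit memory use."""
    digest = hashlib.md5()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_upload(local_path: Path, remote_md5: str) -> bool:
    """Hypothetical check: compare the local MD5 with a hex checksum reported by the storage."""
    return md5_of_file(local_path) == remote_md5.lower()
```

Reading in chunks means even very large files (such as disk images) can be hashed without loading them into memory at once.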

As for the consistency check, it updates storage usage information in case files have been changed outside of our software; nothing is uploaded or downloaded during the process.

-

Searching for modified files is very very slow

It's difficult to say what's going on without seeing the diagnostic info, but this is most likely a problem we've been working on over the last couple of months. Updates 6.0.1 and 6.0.2 were supposed to fix it, but there will be more improvements in version 6.1, which should be released at the beginning of June.

-

Basic block level vs file backup

Well, it's not really a "wrong" choice: if you enable it, some of the larger files will benefit from it. You can enable or disable it at any time; it won't affect your existing backups.

-

Cannot restore from S3 .cbl archive

You need to use our software to restore any data that was backed up with it. For further investigation, it's better to send us diagnostic logs via the tools > diagnostic menu, and we'll continue the investigation in the ticket system.

-

Basic block level vs file backup

SFTP is considered a legacy protocol on our side, so I wouldn't recommend using it. It's provided "as is" with no guarantees.

As for block-level backups, you're absolutely correct: you need to run full backups from time to time to keep versioning and purging consistent.
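Here's a simplified Python sketch of why that is: a block-level run only stores the blocks that changed since the previous version, so each increment depends on the versions before it, all the way back to the last full backup. Until a new full starts a new chain, older versions can't be purged on their own. The fixed block size, the per-block MD5 comparison and the function names are assumptions for illustration, not how our software actually detects changes.

```python
import hashlib
from typing import List

# Hypothetical tiny block size so the example is easy to follow;
# real block-level backups use far larger blocks.
BLOCK_SIZE = 4


def block_hashes(data: bytes, block_size: int = BLOCK_SIZE) -> List[str]:
    """Split data into fixed-size blocks and hash each block."""
    return [
        hashlib.md5(data[i:i + block_size]).hexdigest()
        for i in range(0, len(data), block_size)
    ]


def changed_blocks(old: bytes, new: bytes, block_size: int = BLOCK_SIZE) -> List[int]:
    """Return indices of blocks in `new` that differ from `old` or did not exist in it."""
    old_h = block_hashes(old, block_size)
    new_h = block_hashes(new, block_size)
    return [i for i, h in enumerate(new_h) if i >= len(old_h) or old_h[i] != h]


if __name__ == "__main__":
    previous = b"AAAABBBBCCCC"
    current = b"AAAAbbbbCCCCDD"
    # Only blocks 1 and 3 would go into this block-level increment.
    print(changed_blocks(previous, current))  # prints [1, 3]
```

Restoring `current` requires the last full plus this increment, which is exactly the dependency that a periodic full backup resets.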

A full backup re-uploads any changed files, not the whole backup set. It works on a per-file basis, so unchanged files are not re-uploaded.
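To illustrate the per-file logic, here's a minimal Python sketch that compares each file against a manifest of checksums from the previous backup and selects only the files whose content changed (or that are new). The manifest format and `files_to_reupload` are hypothetical; our software keeps its own repository and may use different criteria to detect changes.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def file_md5(path: Path) -> str:
    """Hex MD5 of a file's content (whole-file read keeps the sketch short)."""
    return hashlib.md5(path.read_bytes()).hexdigest()


def files_to_reupload(root: Path, previous: Dict[str, str]) -> List[str]:
    """Return relative paths under `root` whose content differs from the previous manifest.

    `previous` is a hypothetical manifest: relative path -> MD5 recorded at the last backup.
    Files missing from the manifest are selected too; unchanged files are skipped.
    """
    changed = []
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        rel = path.relative_to(root).as_posix()
        if previous.get(rel) != file_md5(path):
            changed.append(rel)
    return changed
```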

The block-level option is mostly beneficial if you have large files or are using image-based backups; for plans with small files, you won't really notice any benefit.

-

Multiple queries

Regarding your questions:

> When I use online access to browse through the files, how can I search for a particular file?

The search feature has not been implemented yet, but we are already working on it.

> How do I see how much storage is used by a computer?

You can check that via Reporting > Capacity Report, or configure a storage usage report to be sent to your email via Reporting > Scheduled Reports.

> How do I see the list of files backed up?

You can configure reports to be sent to you upon successful backup by enabling the corresponding option in Reporting > User Plan Report. It's also possible to check that in the Monitoring section of the portal.

-

Searching for modified files is very very slow

That could be a problem with database indexing.

Performing a repository sync after the update should help. If it doesn't, you need to create a support ticket so we can discuss the problem in more detail.

-

Change OneDrive password

Currently, you need to log out and then log back in with your updated credentials to refresh them in our software. Authentication tokens are issued for only one set of credentials.

-

Squid3 Proxy Configuration Tips?

Thanks for posting!

Hopefully some of our customers who use proxies will share their experience with this.

-

Unable to restore VM backup from block level backup

OK, I see you've already created two tickets about this, so let's continue the conversation there.

-

Cannot send diagnostic data

You can upload it to any file-sharing service of your choice and send the link in the same email. We'll be looking forward to your feedback.

-

Cannot send diagnostic data

That's exactly why you need to generate the log archive manually, following the instructions in my previous post, and send it to us as an attachment.

-

One backup has two different destination folders

Did that happen after updating the software to version 6.0? We need diagnostic logs from the machine, sent to us via the tools > diagnostic menu, to investigate this properly.

-

Searching for modified files is very very slow

Thanks for sharing your feedback with us.

I can answer your first two questions, but for a proper investigation you need to create a support ticket by sending us the diagnostic logs via the tools > diagnostic menu.

1) It all comes down to your PC's resources and factors such as HDD health and current system load.

2) Repository updates might cause issues like that, but we would still need diagnostic logs from the machine for further investigation, as I mentioned previously.

-

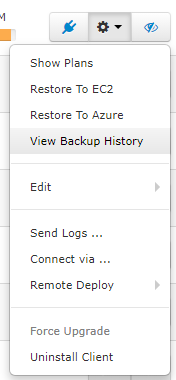

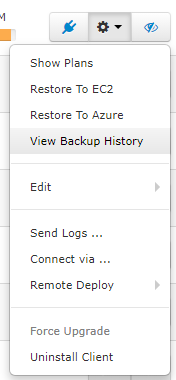

Search for file backup history

You can go to RMM > Remote Management, click the "gear" button, and select "view backup history".

There you can sort the displayed information by files or plans, and also use the search function.

-

Why FILE NOT FOUND?

Judging by the issue description, you're using Google Drive.

It's a known problem caused by their API. That is actually why we'll soon be moving away from this cloud provider: it's not intended for automated upload operations.

I recommend switching to Google Cloud instead.

-

Transfer backup data to another storage device

Simply copying the data and synchronizing the repository (tools > options > repository) would work.
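If you'd rather script the copy step than drag folders around in a file manager, here's a minimal Python sketch (3.8+) that copies the backup folder and then checks that every file arrived with the same size. The paths are hypothetical placeholders, and the script only covers copying; the repository synchronization still has to be done in our software via tools > options > repository.

```python
import shutil
from pathlib import Path
from typing import List


def copy_backup_folder(src: Path, dst: Path) -> List[str]:
    """Copy `src` into `dst` and return files that are missing or differ in size.

    `src` and `dst` are placeholders for the backup folder on the old and new device.
    An empty list means every file arrived with a matching size.
    """
    # dirs_exist_ok (Python 3.8+) allows copying into an existing folder on the new drive.
    shutil.copytree(src, dst, dirs_exist_ok=True)
    problems = []
    for f in sorted(src.rglob("*")):
        if f.is_file():
            rel = f.relative_to(src)
            target = dst / rel
            if not target.is_file() or target.stat().st_size != f.stat().st_size:
                problems.append(rel.as_posix())
    return problems
```

For example, `copy_backup_folder(Path("E:/Backups"), Path("F:/Backups"))`, with your own drive letters and folder names substituted. A size check is a quick sanity test, not a full content verification.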

In general, you need to do the following:

1) Copy your data from the old device to the new one manually.

2) Attach your new device to the machine.

3) Synchronize the local repository via tools > options > repository once the new destination is added.

-

SQL query 'UPDATE cloud_files SET date_deleted_utc = ? WHERE parent_id = ? AND date_deleted_utc

That depends entirely on our QA. We're currently preparing the build for release, so additional testing is still required.