
Comments

-

Image restore verification

Did you select the new backup format? Restore verification does not work with legacy format plans. Once you select the new format, each plan will have a panel where you can select restore verification.

-

Legacy and new format backup

You are correct about the lifecycle policy behavior. I was asking whether MSP360 backups would recognize the contents of the CBL files, old versions and all, such that I could “seed” 90 days' worth of backups to the cloud that were originally on the local drive.

-

Legacy and new format backup

They are anywhere from 3-17 GB each.

So this leads to my next question:

Let's say I have files with 90 days' worth of versions backed up in these CBL files (new format) on my local drive. If I were to copy those files to Amazon using CB Explorer into the appropriate MBS-xxxxx folder, would a subsequent repo sync see them and allow me to "continue on" with a new format cloud backup?

Assuming that it would work, if I were to then use bucket lifecycle management to migrate CBL files to a lower tier of cloud storage, would every file contained in the CBL have to match the aging criteria?

Or is there some intelligence as to what gets put into each CBL file?

-

Immutable Backups

The strategy we use to protect our local backups:

- Put the backup USB HD on the Hyper-V host and then share it out to the guest VMs.

- Use a different admin password on the Hyper-V host than is used on the guest VMs (in case someone gets into the file server guest VM where all of the data resides).

- Do not map drives to the backup drive.

- Use the agent console password protection feature (including protecting the CLI).

- Turn off the ability to delete backup data and modify plans from the agent console (company: custom options). You can always turn it back on and off again as necessary to make modifications.

- Encrypt the local backups as well so that if someone walks off with the drive it is of no use to them.

We also do image/VHDx backups to the cloud and file backups to not one, but two different public cloud platforms.

-

3-2-1 with the new backup format

In a word, yes. It is a safe and effective way. It allows for flexibility in retention and in file format.

-

File Server best practices

A couple of questions:

- Where are you sending the backups to?

- What are the ISP uplink speeds of your clients?

- Are you using the Hyper-V version of the software, or just the file backup version?

We have several clients with large data-vhdx files. We run weekly synthetic fulls to Backblaze B2 and incrementals the other six nights.

The synthetic fulls take at most 10-12 hrs over the weekend. The incrementals are fast (1-2 hrs.)

But we have two clients with large data files and painfully slow upload speeds (5 Mbps). For those it is simply not feasible to complete even a weekly backup over the weekend. We de-select the data VHDx for those clients.
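To put numbers on the uplink problem, here is a rough back-of-the-envelope sketch. The 500 GB upload size and the 60-hour weekend window are assumptions for illustration only, and `upload_hours` is a hypothetical helper, not anything from the backup software. It ignores protocol overhead and compression.

```python
def upload_hours(size_gb: float, uplink_mbps: float, efficiency: float = 1.0) -> float:
    """Hours needed to send size_gb (decimal gigabytes) over an uplink of uplink_mbps.

    efficiency is the fraction of the nominal line rate actually achieved
    (1.0 means the full rate, which is optimistic).
    """
    bits = size_gb * 1e9 * 8
    seconds = bits / (uplink_mbps * 1e6 * efficiency)
    return seconds / 3600


WEEKEND_HOURS = 60   # assumed window, e.g. Friday evening to Monday morning
UPLOAD_GB = 500      # assumed amount of changed data to send

for mbps in (5, 25, 100):
    hours = upload_hours(UPLOAD_GB, mbps)
    verdict = "fits" if hours <= WEEKEND_HOURS else "does not fit"
    print(f"{mbps:>3} Mbps: {hours:6.1f} h, {verdict} in a {WEEKEND_HOURS} h window")
```

Under these assumptions, 500 GB needs roughly 222 hours at 5 Mbps but about 44 hours at 25 Mbps, which is why the slow-uplink clients cannot finish over a weekend.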

Doing synthetic fulls each week will reduce the amount of time it takes as there are fewer changed blocks to upload compared to once per month.

We have been pushing clients to upgrade to higher-speed plans or fiber, as it makes disaster recovery a lot faster when we can essentially bring back their entire system from the previous day in one set of (large) VHDx restores.

If slow uplinks are the issue and they cannot be increased to, say, 25 Mbps up, then only upload the non-data VHDx files to the cloud and rely on the file-level (old format) backups for data recovery. I trust that you are doing file-level backups to the cloud anyway so as to have 30/90 days of version/deleted file retention.

-

Legacy and new format backup

David, great write-up explaining the differences. Can you help me get a feel for the reduction in object count using the new format? I use Amazon lifecycle management to move files from S3 to Archive after 120 days (the files that do not get purged after my 90-day retention expires). However, the cost of the API calls makes that a bad strategy for customers with lots of very small files (I'm talking a million files that take up 200 GB total). If I were to re-upload the files in the new format to Amazon and do a weekly synthetic full (such that I only have two fulls for a day or so, then back to one), would the objects be significantly larger such that the Glacier migration would be cost-effective?
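The trade-off in this question can be sketched as simple arithmetic: lifecycle transitions are billed per object, while the storage saving depends on total size. All prices below are placeholder assumptions for illustration and should be replaced with the current figures for your region and storage class. The object count of 20 for the new format is also hypothetical, and both function names are made up for this sketch.

```python
def transition_cost(object_count: int, price_per_1000_requests: float) -> float:
    """One-time cost of lifecycle-transitioning object_count objects."""
    return object_count / 1000 * price_per_1000_requests


def months_to_break_even(object_count: int, total_gb: float,
                         price_per_1000_requests: float,
                         standard_gb_month: float,
                         archive_gb_month: float) -> float:
    """Months of cheaper storage needed to pay back the transition requests."""
    monthly_saving = total_gb * (standard_gb_month - archive_gb_month)
    if monthly_saving <= 0:
        return float("inf")
    return transition_cost(object_count, price_per_1000_requests) / monthly_saving


# Placeholder prices (assumptions, not quoted from any price list).
REQUEST_PRICE = 0.05   # USD per 1,000 transition requests
STANDARD = 0.023       # USD per GB-month
ARCHIVE = 0.004        # USD per GB-month

many_small = months_to_break_even(1_000_000, 200, REQUEST_PRICE, STANDARD, ARCHIVE)
few_large = months_to_break_even(20, 200, REQUEST_PRICE, STANDARD, ARCHIVE)
print(f"1,000,000 small objects: {many_small:.1f} months to break even")
print(f"20 large objects:        {few_large:.4f} months to break even")
```

With these placeholder prices, a million small objects cost about $50 to transition and take about 13 months to pay back on 200 GB, while a handful of large objects pay back almost immediately. Minimum storage duration charges and per-object overhead in archive tiers are not modeled here.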

-

Connection error Code: 1056

Since upgrading to the 7.7.0.107 release I have had seven machines give me license errors or "an unexpected error has occurred". I worked around the license errors by switching the license type, then switching it back. I just installed the .109 release and it fixed the unexpected error issue.

-

Google Storage Price Increase

In any event, I am offering free consultation regarding backend storage cost, as I am familiar with and use Wasabi, Backblaze, Google, and Amazon.

-

Google Storage Price Increase

Thanks David,

My understanding is that the suspension of the transfer fees only applies to the bulk data conversion from another backend platform. Not sure why anybody would want to do that given the much lower cost of Wasabi and Backblaze.

I suspect very few people use Google, and in this case that’s a good thing. I just wonder how many people are using standard Amazon S3 for backups instead of One Zone-IA: $.023 vs. $.01.

-

New Backup Format - Size comparison on the bucket vs legacy

We design our systems to have a hypervisor and a separate VHDx disk for the DC and two VHDx disks for the file server: one with the OS (C:) and the other with the data (D:).

Using the new format, we do nightly incrementals and weekly synthetic fulls of all VHDx on a given server to Backblaze B2 (not Backblaze S3, as it does not support synthetic backups). Backblaze is half the cost of the cheapest (viable) Amazon price.

We also do legacy format file backups locally, and to a separate cloud provider (Google Nearline or Wasabi).

So a complete system restore in a disaster situation requires only that we restore the VHDx files, which is a lot faster than restoring the files individually. A synthetic full backup of a 2 TB data VHDx file can be completed in 12-15 hours each weekend, depending on your upload speed and the data change rate. The incrementals run in five hours tops each night.

So I suggest local (one-year retention, not guaranteed) and cloud legacy individual file backups (90-day retention, guaranteed) for operational recovery, and new format VHDx/image backups for DR. We keep only the most recent full VHDx files and any subsequent incrementals. Each week we start a new set and the previous week’s set gets purged.

-

Tracking deleted objects

It’s more of a nice-to-have: to be able to see which files are going to get purged once the retention period for deleted files is reached.

The bigger issue is the one where we are required to restore all files that are flagged as deleted if we select “restore deleted files”.

We keep two years of deleted files on local storage, so it would be nice to have a setting where we could specify “restore deleted files deleted before or after a specific date”.

-

New Backup Format - Size comparison on the bucket vs legacy

We use legacy format for file backups. It is incremental forever. There is no need to keep multiple "full" copies such as is required with the new format.

We use the new format exclusively for disaster recovery VHDx and image backups. Now that we can keep a single version of the image/VHDx in the cloud, it has worked out great. We do daily incrementals and weekly synthetic fulls to Backblaze, which does not have a minimum retention period.

It makes zero sense to go with the new format for file backups unless you are required to keep, say, an annual full, a quarterly full, etc. We have no such requirement.

-

Tracking deleted objects

For troubleshooting purposes, we would like to be able to see which files were deleted from the source on any given day. A report that shows files/folders deleted on a particular client machine on a certain day or range of dates would be great.
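To illustrate the kind of report meant here, a minimal sketch that compares two listings of a source folder taken on different days. This runs outside the backup agent and is not an MSP360 feature; `snapshot` and `deleted_between` are hypothetical helper names.

```python
from __future__ import annotations

from pathlib import Path


def snapshot(root: str) -> set[str]:
    """Relative paths of all files currently under root."""
    base = Path(root)
    return {str(p.relative_to(base)) for p in base.rglob("*") if p.is_file()}


def deleted_between(earlier: set[str], later: set[str]) -> list[str]:
    """Paths present in the earlier listing but missing from the later one."""
    return sorted(earlier - later)


# Example with literal listings standing in for two daily snapshots.
monday = {"docs/plan.docx", "docs/budget.xlsx", "photos/site.jpg"}
tuesday = {"docs/plan.docx"}
for path in deleted_between(monday, tuesday):
    print("deleted:", path)
```

Saving the output of `snapshot` once a day and diffing consecutive days would flag a large accidental deletion the morning after it happens. Note that a renamed or moved file shows up as deleted in this simple comparison.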

Does the backup storage tab have a different icon or a flag that shows a file/folder has been deleted?

It would be nice to get ahead of large accidental file/folder deletions rather than waiting for the client to realize a file/folder is missing.

And on a somewhat related note, has there been any progress on the true point-in-time restore model that we discussed a while back?

-

Backup Agent 7.5.1 Released

Backup Fan

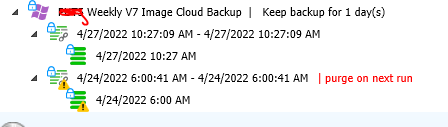

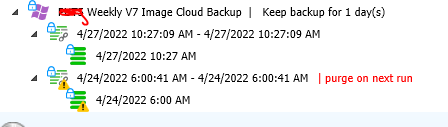

Summary: I believe that the major fix is to make the New Backup Format retention/purge settings work the way they were supposed to in the last release. When we run a synthetic full, and it completes successfully, the prior synthetic full can now be purged and we can end up with only one version/generation in cloud storage. I tested it and it works.
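The behavior described in this summary can be modeled in a few lines. This is a simplified sketch of the keep-one-generation outcome, not the agent's actual purge logic; `Generation` and `apply_backup` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Generation:
    full: str
    incrementals: list[str] = field(default_factory=list)


def apply_backup(kept: list[Generation], kind: str, label: str,
                 succeeded: bool = True) -> list[Generation]:
    """Return the generations kept in storage after one backup run."""
    if not succeeded:
        # A failed run never triggers a purge, so nothing changes.
        return kept
    if kind == "full":
        # A successful full starts a new generation; older ones are purged.
        return [Generation(full=label)]
    if not kept:
        raise ValueError("an incremental needs an existing full")
    kept[-1].incrementals.append(label)
    return kept


storage: list[Generation] = []
storage = apply_backup(storage, "full", "week1-full")
storage = apply_backup(storage, "incremental", "week1-mon")
storage = apply_backup(storage, "full", "week2-full", succeeded=False)
print([g.full for g in storage])   # the week 1 generation is still there
storage = apply_backup(storage, "full", "week2-full")
print([g.full for g in storage])   # only the week 2 generation remains
```

The point of the model is the ordering: the old generation is only dropped after the new full has completed successfully, so there is always at least one restorable generation.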

Detail:

We do weekly new-format synthetic fulls to Backblaze B2 of all client VHDx and image files. We only need the latest generation kept, but even though we set the retention to 1 day, the purge process did not delete the prior week's full. This resulted in two weeks of fulls being kept, effectively doubling our storage requirements compared to the legacy format, which allowed us to keep only one version with no problem. The new format synthetic fulls are such a massive improvement (runtime-wise) that it was still worth it, but this is now fixed.

-

How does CBB local file backups handle moved data?

The reason that we use legacy format for file backups, both cloud and local, is that they are incremental forever, meaning once a file gets backed up, it never gets backed up again unless it is modified.
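As a rough illustration of the incremental-forever idea, the sketch below picks only new or modified files for upload. Comparing last-modified timestamps is an assumption made for this example; how the agent actually detects changes is not described here, and `files_to_upload` is a hypothetical name.

```python
from __future__ import annotations


def files_to_upload(current: dict[str, float],
                    already_backed_up: dict[str, float]) -> list[str]:
    """Both arguments map a file path to its last-modified timestamp.

    A file is selected when it has never been backed up or when its
    timestamp differs from the one recorded at the last backup.
    """
    return sorted(
        path for path, mtime in current.items()
        if already_backed_up.get(path) != mtime
    )


backed_up = {"report.docx": 100.0, "photo.jpg": 200.0}
on_disk = {"report.docx": 150.0, "photo.jpg": 200.0, "new.txt": 300.0}
print(files_to_upload(on_disk, backed_up))
```

Here `photo.jpg` is unchanged and is skipped, while the modified `report.docx` and the new `new.txt` are selected. A file moved to a different path would look like a new file under this path-based comparison.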

The new backup format requires periodic "True full" backups, meaning that even the unchanged files have to be backed up again. Now it is true that the synthetic backup process will shorten the time to complete a "true full" backup, but why keep two copies of files that have not changed?

As I have said before, unless you feel some compelling need to imitate tape backups with the GFS paradigm, the legacy format incremental forever is the only way to go for file-level backups.

-

Retention Policy Problem with V7

I updated the server to 7.5 and made sure that my retention was set to 1 day (as it had been). I forced a synthetic full image backup.

Since it had been four days since the last full backup, I expected the four-day-old full to get purged, but that did not happen.

It is behaving the same as the prior version - It always keeps two generations, where I was expecting to now only have to keep one.

See the attached file for a screenshot of the backup storage showing that both generations are still there.

Attachment: PUT5 (9K)

Steve Putnam

- © 2025 MSP360 Forum